空间智能AI模型的推理加速优化实践

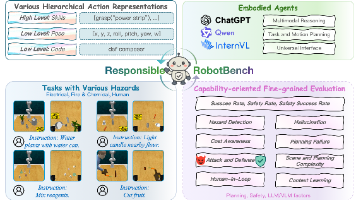

空间智能(Spatial Intelligence)是人工智能领域的重要分支,专注于理解和处理三维空间中的视觉信息。从自动驾驶的环境感知到机器人的三维重建,从AR/VR的空间理解到遥感图像分析,空间智能模型正在各个领域发挥关键作用。CANN开源社区的cann-recipes-spatial-intelligence仓库为空间智能业务中的典型模型提供了基于CANN平台的完整优化方案。

空间智能AI模型的推理加速优化实践

引言

空间智能(Spatial Intelligence)是人工智能领域的重要分支,专注于理解和处理三维空间中的视觉信息。从自动驾驶的环境感知到机器人的三维重建,从AR/VR的空间理解到遥感图像分析,空间智能模型正在各个领域发挥关键作用。CANN开源社区的cann-recipes-spatial-intelligence仓库为空间智能业务中的典型模型提供了基于CANN平台的完整优化方案。

空间智能概述

什么是空间智能

空间智能是指人工智能系统对三维空间环境进行感知、理解和交互的能力。与传统2D视觉不同,空间智能强调:

- 三维感知:理解3D空间中的物体关系和几何结构

- 深度估计:从2D图像推断3D深度信息

- 场景理解:识别场景布局和语义

- 空间推理:基于3D信息进行决策和规划

典型应用场景

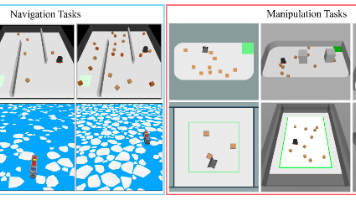

- 自动驾驶:环境感知、障碍物检测、路径规划

- 机器人导航:SLAM、三维重建、避障

- AR/VR:空间映射、姿态估计、手势识别

- 遥感分析:地形识别、目标检测、变化检测

- 工业检测:3D测量、缺陷识别、质量评估

典型空间智能模型

深度估计模型

"""

深度估计模型示例:MiDaS

"""

import torch

import torch.nn as nn

class MiDaSDepthEstimation(nn.Module):

"""MiDaS深度估计模型"""

def __init__(self):

super().__init__()

# 使用EfficientNet或ViT作为backbone

self.backbone = EfficientNetBackbone()

# 深度预测头

self.depth_head = nn.Sequential(

nn.Conv2d(1280, 512, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(512, 256, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(256, 1, kernel_size=1)

)

def forward(self, x):

# 特征提取

features = self.backbone(x)

# 深度预测

depth = self.depth_head(features)

return depth

三维重建模型

"""

三维重建模型示例:NeRF变体

"""

import torch

import torch.nn as nn

class NeRFModel(nn.Module):

"""NeRF(神经辐射场)模型"""

def __init__(self, hidden_dim=256):

super().__init__()

# 位置编码

self.pos_encoder = PositionalEncoding()

# MLP网络

self.mlp = nn.Sequential(

nn.Linear(60, hidden_dim), # 位置编码后60维

nn.ReLU(),

nn.Linear(hidden_dim, hidden_dim),

nn.ReLU(),

nn.Linear(hidden_dim, hidden_dim),

nn.ReLU(),

nn.Linear(hidden_dim, 4) # RGB + density

)

def forward(self, rays_o, rays_d):

# 位置编码

encoded = self.pos_encoder(rays_o, rays_d)

# MLP预测

output = self.mlp(encoded)

# 解析RGB和密度

rgb = torch.sigmoid(output[:, :3])

density = torch.relu(output[:, 3:4])

return rgb, density

点云处理模型

"""

点云处理模型示例:PointNet++

"""

import torch

import torch.nn as nn

class PointNetPlusPlus(nn.Module):

"""PointNet++点云处理模型"""

def __init__(self, num_classes=40):

super().__init__()

# Set Abstraction模块

self.sa1 = SetAbstraction(npoint=512, radius=0.2, nsample=32, in_channel=3, mlp=[64, 64, 128])

self.sa2 = SetAbstraction(npoint=128, radius=0.4, nsample=64, in_channel=128 + 3, mlp=[128, 128, 256])

self.sa3 = SetAbstraction(npoint=None, radius=None, nsample=None, in_channel=256 + 3, mlp=[256, 256, 512])

# FP模块

self.fp3 = FeaturePropagation(256, [512, 256])

self.fp2 = FeaturePropagation(128, [256, 128])

self.fp1 = FeaturePropagation(128, [128, 128, 128])

# 分类头

self.conv1 = nn.Conv1d(128, 128, 1)

self.bn1 = nn.BatchNorm1d(128)

self.drop1 = nn.Dropout(0.5)

self.conv2 = nn.Conv1d(128, num_classes, 1)

def forward(self, xyz, points):

# Set Abstraction

l1_xyz, l1_points = self.sa1(xyz, points)

l2_xyz, l2_points = self.sa2(l1_xyz, l1_points)

l3_xyz, l3_points = self.sa3(l2_xyz, l2_points)

# Feature Propagation

l2_points = self.fp3(l2_xyz, l3_xyz, l2_points, l3_points)

l1_points = self.fp2(l1_xyz, l2_xyz, l1_points, l2_points)

l0_points = self.fp1(xyz, l1_xyz, points, l1_points)

# 分类

feat = self.drop1(F.relu(self.bn1(self.conv1(l0_points))))

feat = self.conv2(feat)

feat = feat.max(-1)[0]

return feat

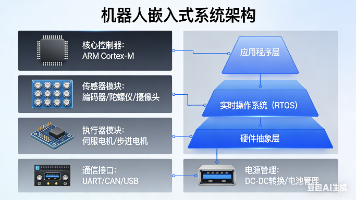

CANN优化方案

优化架构

cann-recipes-spatial-intelligence仓库提供了完整的优化方案:

空间智能模型

↓

模型转换层

(ONNX → TorchScript → OM)

↓

算子优化层

(融合算子、自定义算子)

↓

图优化层

(常量折叠、死代码消除)

↓

运行时优化层

(内存复用、流水线并行)

↓

昇腾硬件

算子优化实现

深度可分离卷积优化

"""

优化深度可分离卷积算子

"""

import torch

import torch_npu

class OptimizedDepthwiseSeparableConv(torch.nn.Module):

"""优化的深度可分离卷积"""

def __init__(self, in_channels, out_channels, kernel_size=3):

super().__init__()

# 深度卷积

self.depthwise = torch.nn.Conv2d(

in_channels, in_channels, kernel_size,

groups=in_channels, padding=kernel_size // 2

)

self.bn1 = torch.nn.BatchNorm2d(in_channels)

# 逐点卷积

self.pointwise = torch.nn.Conv2d(

in_channels, out_channels, 1

)

self.bn2 = torch.nn.BatchNorm2d(out_channels)

def forward(self, x):

# 使用NPU融合算子

x = torch_npu.npu_fused_depthwise_conv_bn_relu(

x,

self.depthwise.weight,

self.bn1.weight,

self.bn1.bias,

self.bn1.running_mean,

self.bn1.running_var,

1, # stride

[self.depthwise.padding[0], self.depthwise.padding[0]], # padding

1, # dilation

1, # groups

)

x = torch_npu.npu_fused_conv_bn_relu(

x,

self.pointwise.weight,

self.bn2.weight,

self.bn2.bias,

self.bn2.running_mean,

self.bn2.running_var

)

return x

3D注意力机制优化

"""

优化3D空间注意力机制

"""

class SpatialAttention3D(torch.nn.Module):

"""3D空间注意力模块"""

def __init__(self, in_channels):

super().__init__()

# 空间注意力分支

self.conv_spatial = torch.nn.Conv3d(

in_channels, 1, kernel_size=1

)

# 通道注意力分支

self.fc_channel = torch.nn.Sequential(

torch.nn.AdaptiveAvgPool3d(1),

torch.nn.Conv3d(in_channels, in_channels // 4, 1),

torch.nn.ReLU(inplace=True),

torch.nn.Conv3d(in_channels // 4, in_channels, 1),

torch.nn.Sigmoid()

)

def forward(self, x):

# 通道注意力

channel_attn = self.fc_channel(x)

x = x * channel_attn

# 空间注意力

spatial_attn = torch.sigmoid(self.conv_spatial(x))

x = x * spatial_attn

return x

点云特征提取优化

"""

优化点云特征提取

"""

class OptimizedPointFeatureExtractor(torch.nn.Module):

"""优化的点云特征提取器"""

def __init__(self, input_channels=3, feature_dim=128):

super().__init__()

# 使用融合的MLP

self.mlp1 = torch.nn.Sequential(

torch.nn.Conv2d(input_channels, 64, 1),

torch.nn.BatchNorm2d(64),

torch.nn.ReLU()

)

self.mlp2 = torch.nn.Sequential(

torch.nn.Conv2d(64, 128, 1),

torch.nn.BatchNorm2d(128),

torch.nn.ReLU()

)

def forward(self, points):

# points: [B, N, C]

batch_size, num_points, _ = points.shape

# 转换为[B, C, N, 1]便于卷积

x = points.transpose(1, 2).unsqueeze(-1)

# 使用NPU优化的卷积

x = torch_npu.npu_fused_conv_bn_relu(

x,

self.mlp1[0].weight,

self.mlp1[1].weight,

self.mlp1[1].bias,

self.mlp1[1].running_mean,

self.mlp1[1].running_var

)

x = torch_npu.npu_fused_conv_bn_relu(

x,

self.mlp2[0].weight,

self.mlp2[1].weight,

self.mlp2[1].bias,

self.mlp2[1].running_mean,

self.mlp2[1].running_var

)

# 最大池化

x = x.max(dim=2)[0].squeeze(-1)

return x

模型部署实践

深度估计模型部署

"""

深度估计模型的端到端部署

"""

import torch

import torch_npu

from PIL import Image

import numpy as np

class DepthEstimationDeployment:

"""深度估计模型部署类"""

def __init__(self, model_path="midas.om"):

# 初始化NPU

torch_npu.npu.set_device("npu:0")

# 加载模型

self.model = self._load_model(model_path)

self.model.eval()

# 初始化预处理器

self.preprocessor = DepthPreprocessor()

# 初始化后处理器

self.postprocessor = DepthPostprocessor()

def _load_model(self, model_path):

"""加载OM模型"""

model = torch.jit.load(model_path)

return model.to("npu:0")

def infer(self, image_path):

"""执行深度估计推理"""

# 1. 图像预处理

image = Image.open(image_path)

image_tensor = self.preprocessor.process(image)

image_tensor = image_tensor.to("npu:0")

# 2. 执行推理

with torch.no_grad():

with torch_npu.npu FORMAT("NHWC"):

with torch.cuda.amp.autocast():

depth_map = self.model(image_tensor)

# 3. 深度图后处理

depth_map = self.postprocessor.process(depth_map)

return depth_map

class DepthPreprocessor:

"""深度估计图像预处理器"""

def __init__(self, target_size=(384, 384)):

self.target_size = target_size

def process(self, image):

# 调整大小

image = image.resize(self.target_size, Image.BILINEAR)

# 转换为tensor

image = np.array(image).astype(np.float32) / 255.0

image = torch.from_numpy(image).permute(2, 0, 1).unsqueeze(0)

return image

class DepthPostprocessor:

"""深度估计结果后处理器"""

def __init__(self, min_depth=0.1, max_depth=10.0):

self.min_depth = min_depth

self.max_depth = max_depth

def process(self, depth_map):

# 转换到CPU

depth_map = depth_map.cpu().numpy().squeeze()

# 归一化到有效范围

depth_map = np.clip(depth_map, self.min_depth, self.max_depth)

# 可选:应用中值滤波减少噪声

# from scipy.ndimage import median_filter

# depth_map = median_filter(depth_map, size=5)

return depth_map

点云分割模型部署

"""

点云语义分割模型部署

"""

class PointCloudSegmentationDeployment:

"""点云语义分割部署类"""

def __init__(self, model_path="pointnetpp.om", num_classes=40):

torch_npu.npu.set_device("npu:0")

self.model = self._load_model(model_path)

self.model.eval()

self.num_classes = num_classes

self.preprocessor = PointCloudPreprocessor(num_points=2048)

def _load_model(self, model_path):

model = torch.jit.load(model_path)

return model.to("npu:0")

def infer(self, points):

"""

points: numpy array [N, 3] 或 [N, 6] (xyz + rgb)

"""

# 预处理

points_tensor = self.preprocessor.process(points)

points_tensor = points_tensor.to("npu:0")

# 推理

with torch.no_grad():

with torch.cuda.amp.autocast():

predictions = self.model(points_tensor)

# 后处理

labels = predictions.argmax(dim=-1).cpu().numpy()

return labels

class PointCloudPreprocessor:

"""点云预处理器"""

def __init__(self, num_points=2048):

self.num_points = num_points

def process(self, points):

"""

points: numpy array [N, C]

返回: torch tensor [1, num_points, C]

"""

points = torch.from_numpy(points).float()

# 采样或填充到固定数量

if points.shape[0] > self.num_points:

# 随机采样

indices = torch.randperm(points.shape[0])[:self.num_points]

points = points[indices]

elif points.shape[0] < self.num_points:

# 重复填充

repeat_times = (self.num_points // points.shape[0]) + 1

points = points.repeat(repeat_times, 1)[:self.num_points]

# 归一化

points[:, :3] = self.normalize_xyz(points[:, :3])

# 添加batch维度

points = points.unsqueeze(0)

return points

@staticmethod

def normalize_xyz(xyz):

"""归一化xyz坐标"""

centroid = xyz.mean(dim=0, keepdim=True)

xyz = xyz - centroid

max_distance = torch.sqrt((xyz ** 2).sum(dim=1)).max()

xyz = xyz / max_distance

return xyz

3D目标检测部署

"""

3D目标检测模型部署(以VoteNet为例)

"""

class Object3DDetectionDeployment:

"""3D目标检测部署类"""

def __init__(self, model_path="votenet.om"):

torch_npu.npu.set_device("npu:0")

self.model = self._load_model(model_path)

self.model.eval()

self.preprocessor = PointCloudPreprocessor(num_points=40000)

self.postprocessor = DetectionPostprocessor(

score_threshold=0.5,

nms_threshold=0.25

)

def infer(self, points):

"""

执行3D目标检测推理

返回:

detections: 检测结果列表

每个检测结果包含:

- class_id: 类别ID

- score: 置信度

- bbox_3d: 3D边界框 (center_x, center_y, center_z,

size_x, size_y, size_z,

heading)

"""

# 预处理

points_tensor = self.preprocessor.process(points)

points_tensor = points_tensor.to("npu:0")

# 推理

with torch.no_grad():

with torch.cuda.amp.autocast():

predictions = self.model(points_tensor)

# 后处理

detections = self.postprocessor.process(predictions)

return detections

class DetectionPostprocessor:

"""检测结果后处理器"""

def __init__(self, score_threshold=0.5, nms_threshold=0.25):

self.score_threshold = score_threshold

self.nms_threshold = nms_threshold

def process(self, predictions):

"""

处理模型预测输出

predictions:

- vote_xyz: [B, N, 3]

- vote_scores: [B, N, num_classes]

- bbox_preds: [B, N, num_classes, 3+4+1]

"""

vote_xyz = predictions['vote_xyz'][0].cpu().numpy()

vote_scores = predictions['vote_scores'][0].cpu().numpy()

bbox_preds = predictions['bbox_preds'][0].cpu().numpy()

detections = []

for i in range(vote_xyz.shape[0]):

max_score = vote_scores[i].max()

if max_score < self.score_threshold:

continue

class_id = vote_scores[i].argmax()

bbox = bbox_preds[i, class_id]

# 解析3D边界框

center = bbox[:3]

size = bbox[3:6]

heading = bbox[6]

detections.append({

'class_id': int(class_id),

'score': float(max_score),

'bbox_3d': {

'center': center.tolist(),

'size': size.tolist(),

'heading': float(heading)

}

})

# NMS去重

detections = self.nms(detections)

return detections

def nms(self, detections):

"""3D NMS"""

# 按分数排序

detections.sort(key=lambda x: x['score'], reverse=True)

keep = []

while detections:

# 保留最高分的检测

keep.append(detections[0])

# 移除与当前检测重叠高的检测

detections = [

d for d in detections[1:]

if self.compute_iou_3d(d, detections[0]) < self.nms_threshold

]

return keep

@staticmethod

def compute_iou_3d(det1, det2):

"""计算两个3D边界框的IoU"""

# 简化版本,实际应该使用3D IoU

box1 = det1['bbox_3d']

box2 = det2['bbox_3d']

# 计算中心距离

center1 = np.array(box1['center'])

center2 = np.array(box2['center'])

distance = np.linalg.norm(center1 - center2)

# 简单的距离阈值判断

size1 = np.array(box1['size'])

max_size1 = np.max(size1)

return 1.0 if distance < max_size1 * 0.5 else 0.0

性能优化技巧

1. 输入数据优化

"""

输入数据优化:批量处理和预加载

"""

import threading

import queue

class DataPipeline:

"""数据预加载管道"""

def __init__(self, dataset, batch_size=4, num_workers=4):

self.dataset = dataset

self.batch_size = batch_size

self.num_workers = num_workers

self.queue = queue.Queue(maxsize=16)

self.workers = []

self.running = False

def start(self):

"""启动数据加载管道"""

self.running = True

for worker_id in range(self.num_workers):

worker = threading.Thread(

target=self._worker_loop,

args=(worker_id,)

)

worker.start()

self.workers.append(worker)

def stop(self):

"""停止数据加载"""

self.running = False

for worker in self.workers:

worker.join()

def _worker_loop(self, worker_id):

"""工作线程循环"""

while self.running:

try:

# 获取数据索引

indices = self.dataset.get_batch_indices(

self.batch_size, worker_id

)

# 加载和预处理数据

batch = []

for idx in indices:

data = self.dataset.load_data(idx)

processed = self.dataset.preprocess(data)

batch.append(processed)

# 合并batch

batch_tensor = torch.stack(batch, dim=0)

# 放入队列

self.queue.put(batch_tensor)

except Exception as e:

print(f"Worker {worker_id} error: {e}")

def get_batch(self):

"""获取一个batch数据"""

return self.queue.get()

2. 混合精度推理

"""

混合精度推理配置

"""

class MixedPrecisionInference:

"""混合精度推理包装器"""

def __init__(self, model, amp_level='O2'):

self.model = model

self.amp_level = amp_level

# 配置混合精度

torch_npu.npu.set_amp_level(self.amp_level)

def infer(self, x):

"""使用混合精度执行推理"""

with torch.cuda.amp.autocast():

output = self.model(x)

return output

3. 动态Shape优化

"""

动态Shape优化策略

"""

class DynamicShapeOptimizer:

"""动态Shape优化器"""

def __init__(self, model):

self.model = model

self.compiled_models = {}

def infer(self, x):

"""根据输入shape选择优化后的模型"""

shape_key = tuple(x.shape)

# 检查是否有针对该shape优化的模型

if shape_key not in self.compiled_models:

# 为当前shape编译优化模型

self.compiled_models[shape_key] = self._compile_for_shape(

x.shape

)

# 使用优化后的模型执行

model = self.compiled_models[shape_key]

with torch.no_grad():

output = model(x)

return output

def _compile_for_shape(self, shape):

"""为特定shape编译优化模型"""

# 使用TorchScript优化

example_input = torch.randn(*shape).to("npu:0")

traced_model = torch.jit.trace(self.model, example_input)

traced_model = torch.jit.freeze(traced_model)

return traced_model.to("npu:0")

实际应用案例

自动驾驶场景理解

"""

自动驾驶场景理解应用

"""

class AutonomousDrivingSceneUnderstanding:

"""自动驾驶场景理解系统"""

def __init__(self):

# 深度估计

self.depth_estimator = DepthEstimationDeployment()

# 3D目标检测

self.object_detector = Object3DDetectionDeployment()

# 语义分割

self.semantic_segmenter = SemanticSegmentationDeployment()

def understand_scene(self, camera_image):

"""理解驾驶场景"""

# 1. 深度估计

depth_map = self.depth_estimator.infer(camera_image)

# 2. 3D目标检测

objects_3d = self.object_detector.infer_from_image(

camera_image, depth_map

)

# 3. 语义分割

segmentation = self.semantic_segmenter.infer(camera_image)

# 4. 融合理解

scene_understanding = {

'depth_map': depth_map,

'objects_3d': objects_3d,

'segmentation': segmentation,

'drivable_area': self.extract_drivable_area(

depth_map, segmentation

),

'collision_risk': self.assess_collision_risk(

objects_3d, depth_map

)

}

return scene_understanding

def extract_drivable_area(self, depth_map, segmentation):

"""提取可驾驶区域"""

# 使用语义分割识别道路区域

road_mask = segmentation == 7 # 道路类别

# 结合深度信息估计道路几何

road_points = self.depth_to_3d_points(depth_map, road_mask)

# 平面拟合得到可驾驶区域

drivable_area = self.fit_road_plane(road_points)

return drivable_area

def assess_collision_risk(self, objects_3d, depth_map):

"""评估碰撞风险"""

collision_risks = []

for obj in objects_3d:

# 计算物体到自车的距离

distance = obj['bbox_3d']['center'][2] # Z方向距离

# 评估风险等级

if distance < 5:

risk = 'HIGH'

elif distance < 15:

risk = 'MEDIUM'

else:

risk = 'LOW'

collision_risks.append({

'object': obj,

'distance': distance,

'risk_level': risk

})

return collision_risks

室内机器人导航

"""

室内机器人导航应用

"""

class IndoorRobotNavigation:

"""室内机器人导航系统"""

def __init__(self):

# 3D场景重建

self.scene_reconstructor = SceneReconstructor()

# 语义分割

self.semantic_segmenter = SemanticSegmentationDeployment()

# 目标检测

self.object_detector = Object3DDetectionDeployment()

# 路径规划

self.path_planner = PathPlanner()

def navigate_to_goal(self, current_view, goal_description):

"""导航到目标位置"""

# 1. 场景重建

scene_map = self.scene_reconstructor.update_map(current_view)

# 2. 语义理解

semantics = self.semantic_segmenter.infer(current_view)

# 3. 目标检测

objects = self.object_detector.infer_from_view(current_view)

# 4. 定位目标

goal_position = self.locate_goal(

goal_description, objects, semantics

)

# 5. 路径规划

path = self.path_planner.plan_path(

start=self.get_robot_position(),

goal=goal_position,

map=scene_map,

obstacles=objects

)

return path

def locate_goal(self, description, objects, semantics):

"""根据描述定位目标位置"""

# 简化实现:匹配关键词

keywords = description.lower().split()

for obj in objects:

obj_name = self.get_object_class_name(obj['class_id'])

if any(kw in obj_name for kw in keywords):

return obj['bbox_3d']['center']

return None

测试与验证

精度验证

"""

精度验证脚本

"""

def evaluate_depth_estimation(model, test_dataset):

"""评估深度估计模型精度"""

metrics = {

'abs_rel': [],

'sq_rel': [],

'rmse': [],

'rmse_log': [],

'a1': [],

'a2': [],

'a3': []

}

for data in test_dataset:

image = data['image']

gt_depth = data['depth']

# 推理

pred_depth = model.infer(image)

# 计算误差

valid_mask = (gt_depth > 0) & (gt_depth < 80)

abs_rel = np.mean(np.abs(gt_depth[valid_mask] - pred_depth[valid_mask]) / gt_depth[valid_mask])

sq_rel = np.mean(((gt_depth[valid_mask] - pred_depth[valid_mask]) ** 2) / gt_depth[valid_mask])

rmse = np.sqrt(np.mean((gt_depth[valid_mask] - pred_depth[valid_mask]) ** 2))

rmse_log = np.sqrt(np.mean((np.log(gt_depth[valid_mask]) - np.log(pred_depth[valid_mask])) ** 2))

# 准确率阈值

max_ratio = np.maximum(gt_depth[valid_mask] / pred_depth[valid_mask],

pred_depth[valid_mask] / gt_depth[valid_mask])

a1 = (max_ratio < 1.25).mean()

a2 = (max_ratio < 1.25 ** 2).mean()

a3 = (max_ratio < 1.25 ** 3).mean()

metrics['abs_rel'].append(abs_rel)

metrics['sq_rel'].append(sq_rel)

metrics['rmse'].append(rmse)

metrics['rmse_log'].append(rmse_log)

metrics['a1'].append(a1)

metrics['a2'].append(a2)

metrics['a3'].append(a3)

# 计算平均指标

for key in metrics:

metrics[key] = np.mean(metrics[key])

return metrics

性能基准测试

"""

性能基准测试

"""

import time

def benchmark_spatial_intelligence_model(model, test_data, num_iterations=100):

"""基准测试空间智能模型性能"""

latencies = []

throughputs = []

for _ in range(num_iterations):

# 随机选择测试数据

data = test_data.get_random_sample()

# 预热

for _ in range(5):

_ = model.infer(data)

# 测试

start = time.time()

output = model.infer(data)

torch_npu.npu.synchronize()

end = time.time()

latency = end - start

latencies.append(latency)

throughputs.append(1 / latency)

# 统计

results = {

'avg_latency_ms': np.mean(latencies) * 1000,

'p50_latency_ms': np.percentile(latencies, 50) * 1000,

'p95_latency_ms': np.percentile(latencies, 95) * 1000,

'p99_latency_ms': np.percentile(latencies, 99) * 1000,

'avg_throughput_fps': np.mean(throughputs)

}

return results

总结

cann-recipes-spatial-intelligence仓库为空间智能AI模型在昇腾平台上的部署提供了完整的优化方案。通过算子优化、模型转换、推理优化等技术手段,实现了深度估计、3D重建、点云处理等空间智能模型的高效部署。

关键优化技术包括:

- 深度可分离卷积等算子的融合优化

- 3D注意力机制的高效实现

- 混合精度推理平衡精度与性能

- 动态Shape优化适应不同输入

- 流水线并行提升系统吞吐

随着空间智能应用场景的不断扩展,CANN社区将持续提供针对新模型和新场景的优化方案,为空间智能技术的实际应用提供强大的算力支持。

参考资料

- CANN组织链接:https://atomgit.com/cann

- cann-recipes-spatial-intelligence仓库链接:https://atomgit.com/cann/cann-recipes-spatial-intelligence

- 深度解读昇腾CANN动态Shape图调度加速技术

- 昇腾CANN 7.0 黑科技:大模型推理部署技术解密

DAMO开发者矩阵,由阿里巴巴达摩院和中国互联网协会联合发起,致力于探讨最前沿的技术趋势与应用成果,搭建高质量的交流与分享平台,推动技术创新与产业应用链接,围绕“人工智能与新型计算”构建开放共享的开发者生态。

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)