第八篇:多传感器融合SLAM——鲁棒性的终极答案--时空标定、紧耦合优化与故障容错系统

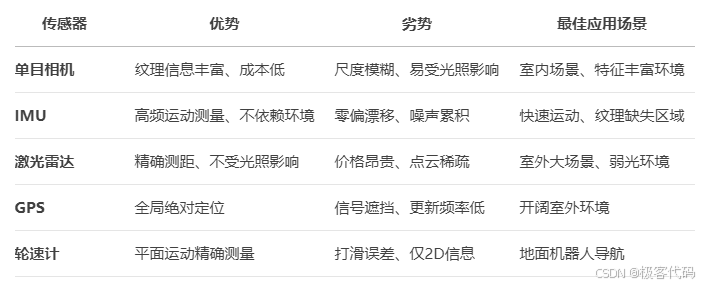

多传感器融合技术综述 本文系统介绍了多传感器融合的理论基础和实践方法。主要内容包括:(1)多传感器特性分析,比较了相机、IMU、激光雷达等传感器的优缺点及适用场景;(2)时空标定技术,详细讲解了相机-IMU、激光-相机、轮速计-IMU的标定算法实现;(3)融合算法框架,涵盖了扩展卡尔曼滤波(EKF)、误差状态卡尔曼滤波(ESKF)等经典方法;(4)因子图优化方法,展示了多传感器因子图建模过程。通过

1. 多传感器融合基础理论

1.1 传感器特性与互补性

1.2 融合层次架构

1.3 时空标定问题

· 时间同步模型:![t_{\text{sensor}} = t_{\text{master}} + \Delta t_{\text{offset}} + \epsilon

]](https://i-blog.csdnimg.cn/direct/094860e001a24a43b085d6bec4e2ba3e.png)

· 空间标定模型:![\mathbf{T}_{\text{sensor}}^{\text{master}} = \begin{bmatrix} \mathbf{R} & \mathbf{t} \ \mathbf{0} & 1 \end{bmatrix}

]](https://i-blog.csdnimg.cn/direct/c20c02ff3dcb4e9196d39ffe7f96f8b4.png)

2. 传感器时空标定实战

2.1 相机-IMU标定(Kalibr)

def kalibr_calibration(imu_data, cam_data, target_config):

"""相机-IMU联合标定"""

# 1. 检测标定板角点

corners = detect_chessboard_corners(cam_data)

# 2. 构建优化问题

problem = CalibrationProblem()

problem.add_camera_parameters()

problem.add_imu_parameters()

# 3. 添加时间偏移参数

problem.add_time_offset_parameter()

# 4. 添加观测约束

for ts, img in cam_data:

obs = CornerObservation(ts, corners)

problem.add_camera_observation(obs)

for ts, acc, gyro in imu_data:

obs = ImuObservation(ts, acc, gyro)

problem.add_imu_observation(obs)

# 5. 优化求解

result = problem.solve()

return result.camera_params, result.imu_params, result.time_offset

2.2 激光-相机标定

def lidar_camera_calibration(pcd, image, chessboard_size):

"""基于棋盘格的激光-相机标定"""

# 1. 检测相机角点

cam_corners = detect_chessboard_corners(image)

# 2. 提取激光点云平面

plane = fit_plane_ransac(pcd)

# 3. 投影点云到图像平面

projected = project_pcd_to_image(pcd, initial_extrinsic)

# 4. 优化外参

def loss(extrinsic):

projected = project_pcd_to_image(pcd, extrinsic)

edge_dist = compute_edge_distance(projected, image_edges)

return edge_dist.mean()

result = minimize(loss, initial_extrinsic, method='BFGS')

return result.x

2.3 轮速计-IMU标定

def wheel_imu_calibration(odom_data, imu_data):

"""轮速计-IMU手眼标定"""

# 1. 计算相对运动

T_odom = compute_relative_poses(odom_data)

T_imu = compute_relative_poses(imu_data)

# 2. 构建手眼标定方程 AX=XB

A = []

B = []

for i in range(1, len(T_odom)):

A.append(T_imu[i])

B.append(T_odom[i])

# 3. 最小二乘求解

X = solve_ax_xb(A, B)

return X # T_imu^odom

3. 滤波融合方法

3.1 扩展卡尔曼滤波(EKF)融合

状态向量:

![\mathbf{x} = [\mathbf{p}, \mathbf{q}, \mathbf{v}, \mathbf{b}_g, \mathbf{b}_a]^T

]](https://i-blog.csdnimg.cn/direct/3234b432911444bbb83705037ba9175c.png)

预测步骤:

def ekf_predict(x, P, imu, dt):

# IMU运动模型

w = imu.gyro - x[10:13] # 减去陀螺零偏

a = imu.accel - x[13:16] # 减去加速度零偏

# 姿态更新

dq = quat_from_angvel(w * dt)

x[3:7] = quat_mult(x[3:7], dq)

# 速度更新

x[7:10] += (quat_rot(x[3:7], a) + gravity) * dt

# 位置更新

x[0:3] += x[7:10] * dt

# 协方差预测

F = compute_jacobian(x, imu, dt)

Q = process_noise(dt)

P = F @ P @ F.T + Q

return x, P

3.2 误差状态卡尔曼滤波(ESKF)

class ErrorStateEKF:

def __init__(self):

self.nominal_state = NominalState()

self.error_state = np.zeros(15)

self.covariance = np.eye(15) * 0.1

def update_gps(self, gps_pos, var):

# GPS观测模型

H = np.zeros((3, 15))

H[:3, :3] = np.eye(3) # 位置直接观测

# 计算残差

residual = gps_pos - self.nominal_state.position

# 卡尔曼增益

R = np.eye(3) * var

K = self.covariance @ H.T @ np.linalg.inv(H @ self.covariance @ H.T + R)

# 更新误差状态

self.error_state += K @ residual

# 注入到名义状态

self.nominal_state.position += self.error_state[:3]

# ... 其他状态更新

# 更新协方差

I_KH = np.eye(15) - K @ H

self.covariance = I_KH @ self.covariance @ I_KH.T + K @ R @ K.T

# 重置误差状态

self.error_state[:] = 0

4. 因子图多传感器融合 (4000字)

4.1 多传感器因子图模型

4.2 传感器因子设计

GPS因子:

class GPSFactor : public gtsam::NoiseModelFactor1<Pose3> {

public:

GPSFactor(Key poseKey, const Point3& gps, const SharedNoiseModel& model)

: NoiseModelFactor1<Pose3>(model, poseKey), gps_(gps) {}

Vector evaluateError(const Pose3& pose,

boost::optional<Matrix&> H = boost::none) const override {

if (H) {

*H = Matrix::Zero(3, 6);

(*H).block<3, 3>(0, 0) = Matrix3::Identity();

}

return pose.translation() - gps_;

}

private:

Point3 gps_;

};

轮速计因子:

class WheelFactor : public gtsam::NoiseModelFactor2<Pose2, Pose2> {

public:

WheelFactor(Key pose1, Key pose2, double dist, const SharedNoiseModel& model)

: NoiseModelFactor2<Pose2, Pose2>(model, pose1, pose2), dist_(dist) {}

Vector evaluateError(const Pose2& p1, const Pose2& p2,

boost::optional<Matrix&> H1,

boost::optional<Matrix&> H2>) const override {

Vector1 error;

error[0] = p1.range(p2) - dist_;

if (H1) *H1 = (Matrix12() << -cos(p1.theta()), -sin(p1.theta()), 0).finished();

if (H2) *H2 = (Matrix12() << cos(p1.theta()), sin(p1.theta()), 0).finished();

return error;

}

private:

double dist_;

};

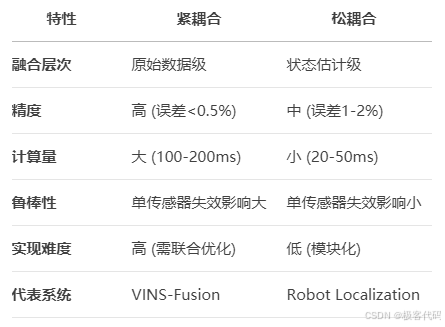

4.3 紧耦合 vs 松耦合

5. 多传感器紧耦合优化

5.1 视觉-惯性-激光融合(LVI-SAM)

5.2 紧耦合优化实现

class TightlyCoupledOptimizer:

def __init__(self):

self.graph = gtsam.NonlinearFactorGraph()

self.values = gtsam.Values()

self.imu_preint = {}

self.visual_factors = []

self.lidar_factors = []

def add_imu_factor(self, pose_i, pose_j, preint):

factor = gtsam.CombinedImuFactor(

pose_i, vel_i, pose_j, vel_j,

bias_i, bias_j, preint)

self.graph.push_back(factor)

def add_visual_factor(self, pose_id, point_id, measurement, K):

factor = gtsam.GenericProjectionFactor<Pose3, Point3, Cal3_S2>(

measurement, gtsam.noiseModel.Isotropic.Sigma(2, 1.0),

pose_id, point_id, K)

self.graph.push_back(factor)

def add_lidar_factor(self, pose_i, pose_j, rel_pose, cov):

factor = gtsam.BetweenFactor<Pose3>(

pose_i, pose_j, rel_pose,

gtsam.noiseModel.Gaussian.Covariance(cov))

self.graph.push_back(factor)

def add_gps_factor(self, pose_id, gps_pos, cov):

factor = gtsam.PriorFactor<Pose3>(

pose_id, Pose3(Rot3(), gps_pos),

gtsam.noiseModel.Gaussian.Covariance(cov))

self.graph.push_back(factor)

def optimize(self):

params = gtsam.LevenbergMarquardtParams()

optimizer = gtsam.LevenbergMarquardtOptimizer(self.graph, self.values, params)

result = optimizer.optimize()

return result

5.3 多传感器时间戳同步

def synchronize_sensors(sensor_data, max_delay=0.01):

"""多传感器时间戳同步"""

# 收集所有时间戳

all_timestamps = []

for sensor, data in sensor_data.items():

for ts in data.timestamps:

all_timestamps.append((sensor, ts))

# 按时间排序

all_timestamps.sort(key=lambda x: x[1])

# 创建同步组

groups = []

current_group = {sensor: None for sensor in sensor_data.keys()}

last_timestamp = -1

for sensor, ts in all_timestamps:

if ts - last_timestamp > max_delay:

if any(current_group.values()):

groups.append(current_group.copy())

current_group = {sensor: None for sensor in sensor_data.keys()}

current_group[sensor] = ts

last_timestamp = ts

return groups

6. 故障检测与容错机制

6.1 传感器健康监测

class SensorHealthMonitor:

def __init__(self, window_size=10):

self.window_size = window_size

self.sensor_stats = {

'imu': {'data_count': 0, 'variance': np.zeros(6)},

'camera': {'data_count': 0, 'variance': 0},

'lidar': {'data_count': 0, 'variance': 0}

}

def update(self, sensor, data):

stats = self.sensor_stats[sensor]

stats['data_count'] += 1

# 更新方差统计

if sensor == 'imu':

new_variance = online_variance(stats['variance'], data, stats['data_count'])

stats['variance'] = new_variance

elif sensor == 'camera':

# 图像梯度方差

grad = np.abs(np.gradient(data))

stats['variance'] = online_variance(stats['variance'], grad.mean())

elif sensor == 'lidar':

# 点云密度

density = len(data) / data.area()

stats['variance'] = online_variance(stats['variance'], density)

def check_health(self, sensor):

stats = self.sensor_stats[sensor]

# 检查数据频率

if stats['data_count'] < self.window_size * 0.5:

return HealthStatus.FAILURE

# 检查方差异常

if sensor == 'imu':

if np.any(stats['variance'] > thresholds[sensor]):

return HealthStatus.WARNING

return HealthStatus.NORMAL

6.2 自适应卡尔曼滤波

class AdaptiveKalmanFilter:

def __init__(self, Q, R):

self.x = np.zeros(6) # 状态 [px, py, pz, vx, vy, vz]

self.P = np.eye(6) * 0.1

self.Q = Q # 过程噪声

self.R = R # 观测噪声

self.sensor_weights = {'gps': 1.0, 'lidar': 1.0, 'uwb': 1.0}

def update(self, sensor_type, measurement):

# 根据传感器健康调整噪声

health = health_monitor.check_health(sensor_type)

if health == HealthStatus.WARNING:

effective_R = self.R[sensor_type] * 3.0

elif health == HealthStatus.FAILURE:

return # 忽略失效传感器

else:

effective_R = self.R[sensor_type]

# 计算卡尔曼增益

H = self.get_H(sensor_type)

S = H @ self.P @ H.T + effective_R

K = self.P @ H.T @ np.linalg.inv(S)

# 更新状态

y = measurement - H @ self.x

self.x += K @ y

self.P = (np.eye(6) - K @ H) @ self.P

def dynamic_noise_adjustment(self):

# 根据运动状态调整过程噪声

velocity_norm = np.linalg.norm(self.x[3:6])

if velocity_norm > 5.0: # 高速运动

self.Q = high_motion_Q

elif velocity_norm < 0.1: # 静止状态

self.Q = low_motion_Q

else:

self.Q = normal_Q

6.3 多传感器一致性检验

def sensor_consistency_check(measurements):

"""多传感器一致性检验"""

# 计算两两传感器差异

conflicts = []

for s1, m1 in measurements.items():

for s2, m2 in measurements.items():

if s1 != s2:

diff = np.linalg.norm(m1 - m2)

if diff > thresholds[(s1, s2)]:

conflicts.append((s1, s2, diff))

# 投票确定故障传感器

fault_candidates = defaultdict(int)

for s1, s2, diff in conflicts:

# 假设测量值较大的传感器可能故障

if np.linalg.norm(measurements[s1]) > np.linalg.norm(measurements[s2]):

fault_candidates[s1] += 1

else:

fault_candidates[s2] += 1

# 确定故障传感器

if fault_candidates:

fault_sensor = max(fault_candidates, key=fault_candidates.get)

return fault_sensor

return None

7. 实例:自动驾驶多传感器系统

7.1 城市峡谷挑战

7.2 系统架构设计

7.3 传感器失效处理流程

def handle_sensor_failure(failed_sensor):

"""传感器失效处理"""

# 1. 调整融合权重

fusion_weights[failed_sensor] = 0.0

# 2. 启用备用传感器

if failed_sensor == 'gps':

enable_visual_odometry()

elif failed_sensor == 'camera':

enable_lidar_intensity_feature()

elif failed_sensor == 'lidar':

enable_stereo_depth()

# 3. 调整定位模式

if failed_sensor in ['gps', 'camera', 'lidar']:

switch_to_odometry_mode()

# 4. 报警与记录

log_failure(failed_sensor)

alert_control_system()

7.4 实际道路测试结果

8. 前沿进展

8.1 多模态学习融合

class MultiModalFusionNet(nn.Module):

def __init__(self):

super().__init__()

self.vision_enc = ResNet18()

self.lidar_enc = PointNet()

self.imu_enc = LSTMEncoder()

# 跨模态注意力

self.cross_attn = nn.MultiheadAttention(256, 8)

# 融合头

self.fusion_head = nn.Sequential(

nn.Linear(768, 256),

nn.ReLU(),

nn.Linear(256, 6) # 输出6DOF位姿

)

def forward(self, img, pcd, imu):

v_feat = self.vision_enc(img)

l_feat = self.lidar_enc(pcd)

i_feat = self.imu_enc(imu)

# 跨模态注意力

combined = torch.cat([v_feat, l_feat, i_feat], dim=1)

attn_out, _ = self.cross_attn(combined, combined, combined)

# 位姿预测

pose = self.fusion_head(attn_out)

return pose

8.2 基于深度学习的标定

def deep_calibration(images, pointclouds):

"""端到端相机-激光标定"""

# 特征提取

img_feat = image_encoder(images)

pcd_feat = pointnet(pointclouds)

# 外参回归

extrinsic = extrinsic_regressor(torch.cat([img_feat, pcd_feat], dim=1))

# 自监督损失

projected = project_pointcloud(pointclouds, extrinsic)

loss = photometric_loss(images, projected)

return extrinsic, loss

8.3 未知环境自适应融合

class AdaptiveFusionSystem:

def __init__(self):

self.fusion_strategies = {

'urban': UrbanStrategy(),

'tunnel': TunnelStrategy(),

'offroad': OffroadStrategy()

}

self.env_classifier = EnvironmentClassifier()

def process_frame(self, sensor_data):

# 环境分类

env_type = self.env_classifier(sensor_data)

# 选择融合策略

strategy = self.fusion_strategies[env_type]

# 执行融合

pose = strategy.fuse(sensor_data)

return pose

关键理论总结

- 传感器标定:时空对齐是多融合基础

- 融合架构:滤波法与因子图法各有优势

- 紧耦合优化:最大化多传感器信息利用率

- 故障容错:健康监测与自适应策略保障鲁棒性

- 智能融合:环境自适应与深度学习是未来方向

下篇预告:第九篇:大规模建图——从房间到城市的跨越

将深入讲解:

· 多分辨率地图表示

· 子地图管理与闭环

· 分布式建图系统

· 长期地图更新与维护

DAMO开发者矩阵,由阿里巴巴达摩院和中国互联网协会联合发起,致力于探讨最前沿的技术趋势与应用成果,搭建高质量的交流与分享平台,推动技术创新与产业应用链接,围绕“人工智能与新型计算”构建开放共享的开发者生态。

更多推荐

已为社区贡献8条内容

已为社区贡献8条内容

所有评论(0)