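Say Goodbye to Tedium: crawl4ai, the New Favorite for Web Scraping, Collects Data in Just a Few Lines of Code! (Complete Code Examples for Multiple Scenarios Included)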

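crawl4ai makes Python crawler development simpler and more efficient. With its concise API, powerful features and flexible configuration options, it has become a popular new choice for everything from simple data collection to complex page interaction. This post skips the long introduction, briefly lists its features and goes straight to runnable code!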

Introduction

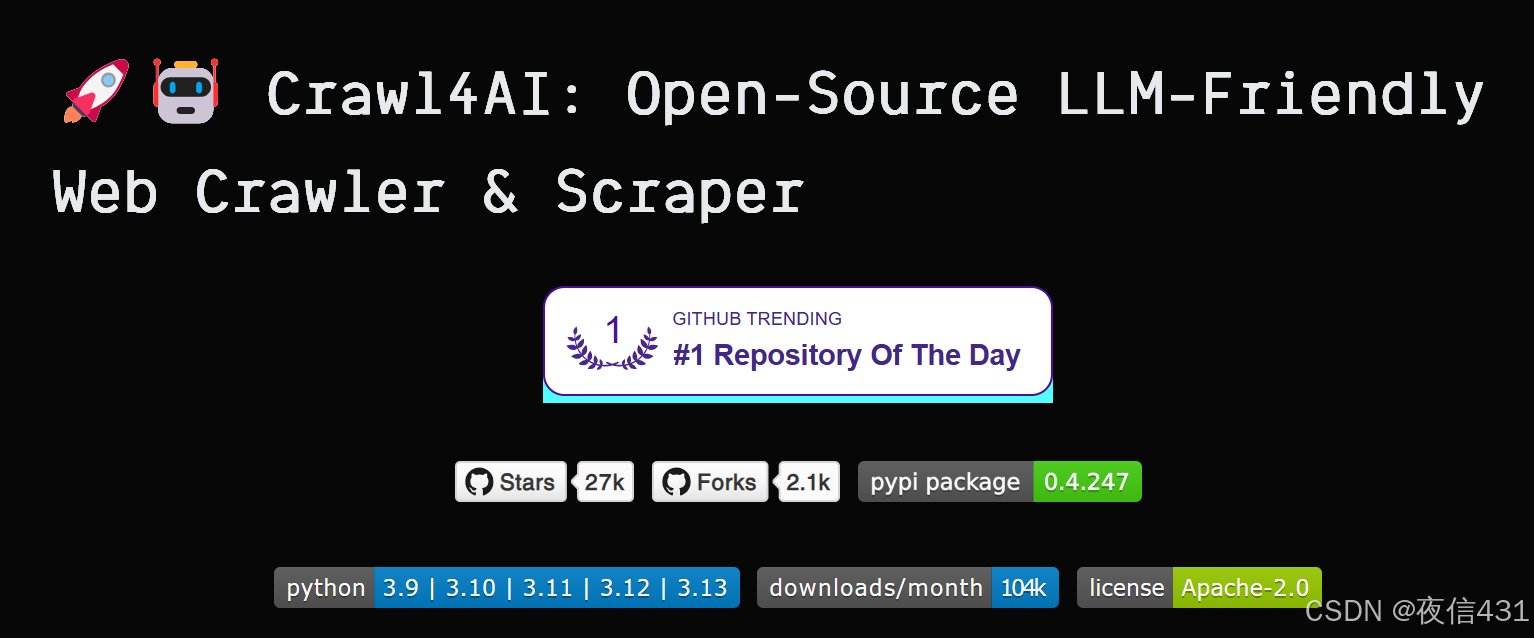

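In today's data-driven world, web crawlers have become an essential tool for gathering information. Traditional crawler frameworks, however, often make you write piles of code, which can get downright painful at times... Today I'd like to introduce a new Python crawling library, crawl4ai. Its concise API and powerful features make writing crawlers easy and even fun. Better still, as you'll see, you really can start crawling with a single line of code!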

Full code and more examples are linked at the end of the post! ⭐

crawl4ai: bringing crawler development back to simplicity

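Since it's simple, there's no need for a long introduction, and you probably wouldn't want to read one anyway. I'll briefly list its features and then go straight to runnable code. Let the code do the talking!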

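Key features of crawl4ai:

- Asynchronous and efficient: built on asyncio, it can handle many requests concurrently, which greatly speeds up crawling.

- Simple and easy to use: a high-level API lets you implement complex crawling logic in just a few lines of code.

- Multiple extraction methods: supports CSS selectors, XPath, JSON and other ways of extracting data.

- Robust error handling: built-in retry mechanisms automatically deal with network errors and page-loading problems.

- Flexible configuration: supports custom request headers, proxies, timeouts and other parameters.

- Works out of the box: no complex setup needed; one line of code is enough to start crawling.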
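The "asynchronous and efficient" point rests on standard asyncio patterns. Below is a minimal standard-library sketch of that idea. Several pages are fetched concurrently with `asyncio.gather`, and an `asyncio.Semaphore` caps how many run at once. `fake_fetch` is a hypothetical stand-in (it only sleeps) for a real crawl call such as `crawler.arun(url=...)`. The limit of 3 is an arbitrary assumption. This is not how crawl4ai implements concurrency internally.

```python
import asyncio


async def fake_fetch(url: str) -> str:
    """Hypothetical stand-in for a real crawl call such as crawler.arun(url=...)."""
    await asyncio.sleep(0.1)  # simulate network latency
    return f"<html>{url}</html>"


async def crawl_all(urls: list[str], max_concurrency: int = 3) -> list[str]:
    """Fetch all URLs concurrently, but never more than max_concurrency at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def fetch_limited(url: str) -> str:
        async with semaphore:  # wait for a free slot before fetching
            return await fake_fetch(url)

    # gather() runs the coroutines concurrently and returns results in input order
    return await asyncio.gather(*(fetch_limited(u) for u in urls))


if __name__ == "__main__":
    pages = [f"https://example.com/list_{i}.html" for i in range(1, 7)]
    results = asyncio.run(crawl_all(pages))
    print(len(results))
```

With 6 pages and a limit of 3, the pages are processed in two "waves" of 3 rather than one after another.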

Installing crawl4ai

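Before you start, make sure the crawl4ai library is installed. You can install it with pip:

pip install crawl4ai

crawl4ai drives a real browser through Playwright, so on a fresh machine you may also need to download the browser once, for example with python -m playwright install chromium. Recent crawl4ai releases also provide a crawl4ai-setup command for this.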

First taste: scraping the Dianying Tiantang (dytt8) movie list in a few lines of code

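Below, we use crawl4ai to scrape the movie list of Dianying Tiantang (dytt8.net) so you can see how concise it is. Note: the code below can be run as-is, as long as the site's page structure hasn't changed!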

import asyncio
import json
from pprint import pprint as pp

from crawl4ai import AsyncWebCrawler
from crawl4ai.extraction_strategy import JsonCssExtractionStrategy


async def extract_movies():
    # Each movie on the list page sits in its own <table> inside ".co_content8 ul"
    schema = {
        "name": "DYTT Movies",
        "baseSelector": ".co_content8 ul table",
        "type": "list",
        "fields": [
            {
                "name": "title",
                "type": "text",
                "selector": "a.ulink",
            },
            {
                "name": "url",
                "type": "attribute",
                "selector": "a.ulink",
                "attribute": "href",
            },
            {
                "name": "date",
                "type": "text",
                "selector": "td:nth-child(2)",
            }
        ],
    }

    extraction_strategy = JsonCssExtractionStrategy(schema, verbose=True)

    async with AsyncWebCrawler(
        verbose=True,
        headers={
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
            'Accept-Encoding': 'gzip, deflate',
            'Connection': 'keep-alive',
            'Cache-Control': 'max-age=0',
            'Upgrade-Insecure-Requests': '1'
        },
        wait_time=5  # longer wait for the page to load
    ) as crawler:
        all_movies = []
        # Only crawl the first 2 pages of the movie list
        for page in range(1, 3):
            try:
                url = f"https://www.dytt8.net/html/gndy/dyzz/list_23_{page}.html"
                result = await crawler.arun(
                    url=url,
                    extraction_strategy=extraction_strategy,
                    bypass_cache=True,  # always fetch a fresh copy
                    page_timeout=30000  # longer timeout, in milliseconds
                )
                if not result.success:
                    print(f"Failed to crawl page {page}, skipping it")
                    continue
                movies = json.loads(result.extracted_content)
                all_movies.extend(movies)
                print(f"Extracted {len(movies)} movies from page {page}")
                # Pause between requests
                await asyncio.sleep(5)
            except Exception as e:
                print(f"Error while crawling page {page}: {str(e)}")
                continue

    if not all_movies:
        print("Could not extract any movie information")
        return []

    # Save the results to a file
    with open("dytt_movies.json", "w", encoding="utf-8") as f:
        json.dump(all_movies, f, ensure_ascii=False, indent=2)

    pp(all_movies)
    print(f"Extracted {len(all_movies)} movies in total")
    return all_movies


if __name__ == "__main__":
    asyncio.run(extract_movies())

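Code walkthrough:

- JsonCssExtractionStrategy: defines the data extraction strategy, describing the fields to extract and the CSS selector for each one.
- AsyncWebCrawler: creates an asynchronous crawler instance, configured with a User-Agent, a wait time and other parameters.
- crawler.arun: runs the crawl, taking the target URL and the extraction strategy.
- result.extracted_content: holds the extracted data as a JSON string, which we parse with json.loads.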
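All the schemas in this article share the same shape. Each has a `name`, a `baseSelector` and a list of `fields`, and every `attribute`-type field also names the attribute to read. A typo in a schema usually just produces empty results, so a small pre-flight check can help. `check_schema` is a hypothetical helper. Its rules only mirror the keys used in this article, not crawl4ai's full schema specification.

```python
def check_schema(schema: dict) -> list[str]:
    """Return a list of problems found in a JSON-CSS schema (empty list = looks OK).

    Hypothetical helper: the rules only mirror the keys used in this article's
    schemas, not crawl4ai's complete specification.
    """
    problems = []
    for key in ("name", "baseSelector", "fields"):
        if key not in schema:
            problems.append(f"missing top-level key: {key}")
    for i, field in enumerate(schema.get("fields", [])):
        for key in ("name", "type", "selector"):
            if key not in field:
                problems.append(f"field {i} missing key: {key}")
        # "attribute" fields must also say which HTML attribute to read
        if field.get("type") == "attribute" and "attribute" not in field:
            problems.append(f"field {i} ({field.get('name')}) has no 'attribute'")
    return problems


if __name__ == "__main__":
    print(check_schema({"name": "demo", "fields": [{"name": "url", "type": "attribute", "selector": "a"}]}))
```

For example, calling `assert not check_schema(schema)` just before `JsonCssExtractionStrategy(schema, verbose=True)` would fail fast on a malformed schema.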

Just copy, paste and run it! After a short wait, the terminal prints the scraped movie information and saves it to dytt_movies.json. Pretty neat, right?
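The saved records contain the raw text the selectors matched. Suppose the `href` values on the list page are site-relative paths such as `/html/...`. A short post-processing step can then trim whitespace, drop rows without a title and turn the links into absolute URLs. `normalize_movies` is a hypothetical helper, sketched with only `json` and `urllib.parse.urljoin` and assuming the `title`/`url`/`date` keys from the schema above:

```python
import json
from urllib.parse import urljoin


def normalize_movies(extracted_content: str, base_url: str) -> list[dict]:
    """Clean the records from result.extracted_content.

    Assumes each record has the 'title', 'url' and 'date' keys from the schema
    above, and that 'url' may be a site-relative path.
    """
    movies = []
    for item in json.loads(extracted_content):
        title = (item.get("title") or "").strip()
        if not title:  # skip rows where the title selector matched nothing
            continue
        movies.append({
            "title": title,
            # urljoin makes "/html/..." absolute and leaves absolute URLs unchanged
            "url": urljoin(base_url, item.get("url") or ""),
            "date": (item.get("date") or "").strip(),
        })
    return movies
```

Inside the crawl loop, `normalize_movies(result.extracted_content, url)` could take the place of the bare `json.loads(...)`.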

Going further: simulating user actions

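Beyond simple data extraction, crawl4ai can also simulate user actions such as clicking buttons, scrolling the page and waiting for elements to load. Below, using a dytt movie detail page as an example, we show how to simulate a user browsing the page with crawl4ai: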

import asyncio

from crawl4ai import AsyncWebCrawler


async def view_movie_detail(movie_url: str):
    """
    View a movie's details, including the plot summary, cast list and download links
    """
    # All arun() calls below share this session_id so that they act on the same
    # browser tab; js_only=True then runs JavaScript on the page that is already open
    session_id = "dytt_detail_session"

    async with AsyncWebCrawler(
        verbose=True,
        javascript=True,
        wait_time=10,
        headers={
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
            'Accept-Language': 'zh-CN,zh;q=0.9'
        }
    ) as crawler:
        try:
            print(f"\nLoading movie detail page: {movie_url}")

            # Open the movie detail page
            result = await crawler.arun(
                url=movie_url,
                session_id=session_id,
                wait_for="css:#Zoom",  # wait for the content area to load
                page_timeout=30000
            )
            if not result.success:
                print("Failed to load the page")
                return

            # Simulate reading the plot summary
            await crawler.arun(
                url=movie_url,
                session_id=session_id,
                js_code="""
                // Scroll to the plot summary section
                const plot = document.querySelector('#Zoom');
                if (plot) plot.scrollIntoView({ behavior: 'smooth' });
                """,
                js_only=True
            )
            print("Viewing the plot summary...")
            await asyncio.sleep(2)

            # Simulate looking at the cast list
            await crawler.arun(
                url=movie_url,
                session_id=session_id,
                js_code="""
                // Scroll to the cast list section
                const cast = document.querySelector('#Zoom table');
                if (cast) cast.scrollIntoView({ behavior: 'smooth' });
                """,
                js_only=True
            )
            print("Viewing the cast list...")
            await asyncio.sleep(2)

            # Simulate looking at the download links
            await crawler.arun(
                url=movie_url,
                session_id=session_id,
                js_code="""
                // Scroll to the download links. The selector is kept from the original
                // post; if it matches nothing on the current page, nothing happens
                const download = document.querySelector('#Zoom thunder_url_hash');
                if (download) {
                    download.scrollIntoView({ behavior: 'smooth' });
                    // Highlight the download link
                    download.style.backgroundColor = '#fff3cd';
                }
                """,
                js_only=True
            )
            print("Viewing the download links...")
            await asyncio.sleep(2)

            print("\nFinished browsing the page!")

        except Exception as e:
            print(f"Error during the operation: {str(e)}")


if __name__ == "__main__":
    # Example movie detail page URL
    movie_url = "https://dytt.dytt8.net/html/gndy/jddy/20241224/65713.html"
    asyncio.run(view_movie_detail(movie_url))

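Code walkthrough:

- javascript=True: enables JavaScript support so that JavaScript code can be executed on the page.
- wait_for="css:#Zoom": waits until the #Zoom element has loaded before continuing.
- js_code: runs custom JavaScript on the page, used here to scroll the page and highlight elements.
- js_only=True: runs the JavaScript on the page that is already open instead of loading the URL again.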
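The three `js_code` snippets above differ only in the selector and the optional highlight, so a small generator can produce them. The sketch below uses a hypothetical helper, `scroll_js`. It wraps each snippet in an immediately invoked function, which keeps the `const` declaration local, so several snippets can run on the same page without name clashes. The selector is quoted with `json.dumps`, which yields a valid JavaScript string literal.

```python
import json
from typing import Optional


def scroll_js(selector: str, highlight_color: Optional[str] = None) -> str:
    """Return a js_code snippet that scrolls to `selector` and optionally highlights it.

    Hypothetical helper: it only builds a string, to be passed as js_code=...
    to crawler.arun(..., js_only=True) as in the example above.
    """
    # json.dumps yields a properly quoted and escaped JavaScript string literal
    sel = json.dumps(selector)
    lines = [
        "(() => {",  # IIFE: keeps `const el` local to this snippet
        f"  const el = document.querySelector({sel});",
        "  if (!el) return;",  # element not found: do nothing
        "  el.scrollIntoView({ behavior: 'smooth' });",
    ]
    if highlight_color:
        lines.append(f"  el.style.backgroundColor = {json.dumps(highlight_color)};")
    lines.append("})();")
    return "\n".join(lines)


if __name__ == "__main__":
    print(scroll_js("#Zoom", highlight_color="#fff3cd"))
```

For example, `js_code=scroll_js('#Zoom table')` could replace the hand-written cast-list snippet.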

Other use cases

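Another strength of crawl4ai is how flexibly it can be applied. Below are a few other scenarios, each with a code example (see the GitCode link at the end of the post for the full code):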

- Scraping the Bilibili popular ranking (hot videos)

import asyncio
import json

from crawl4ai import AsyncWebCrawler
from crawl4ai.extraction_strategy import JsonCssExtractionStrategy


async def extract_videos():
    # Each entry of the ranking list is a ".rank-item" element
    schema = {
        "name": "Bilibili Hot Videos",
        "baseSelector": ".rank-item",
        "type": "list",
        "fields": [
            {
                "name": "title",
                "type": "text",
                "selector": ".info a",
            },
            {
                "name": "up_name",
                "type": "text",
                "selector": ".up-name",
            },
            {
                "name": "play_info",
                "type": "text",
                "selector": ".detail-state",
            },
            {
                "name": "score",
                "type": "text",
                "selector": ".pts div",
            },
            {
                "name": "url",
                "type": "attribute",
                "selector": ".info a",
                "attribute": "href",
            }
        ],
    }

    extraction_strategy = JsonCssExtractionStrategy(schema, verbose=True)

    async with AsyncWebCrawler(
        verbose=True,
        javascript=True,
        wait_time=15,
        headers={
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
            'Accept-Encoding': 'gzip, deflate, br',
            'Connection': 'keep-alive',
            'Cache-Control': 'no-cache',
            'Pragma': 'no-cache',
            'Referer': 'https://www.bilibili.com'
        }
    ) as crawler:
        all_videos = []
        try:
            url = "https://www.bilibili.com/v/popular/rank/all"
            result = await crawler.arun(
                url=url,
                extraction_strategy=extraction_strategy,
                wait_for="css:.rank-list",  # the list is rendered by JavaScript
                page_timeout=60000
            )
            if not result.success:
                print("Crawl failed")
                return []
            videos = json.loads(result.extracted_content)
            all_videos.extend(videos)
            print(f"Extracted {len(videos)} videos")
        except Exception as e:
            print(f"Error while crawling: {str(e)}")
            return []

    if not all_videos:
        print("Could not extract any video information")
        return []

    with open("bilibili_videos.json", "w", encoding="utf-8") as f:
        json.dump(all_videos, f, ensure_ascii=False, indent=2)

    print(f"\nExtracted {len(all_videos)} videos in total")
    return all_videos


if __name__ == "__main__":
    asyncio.run(extract_videos())
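The `play_info` field comes back as raw text. Bilibili may show large counts in the usual Chinese style, such as `123.4万` (万 = 10,000, 亿 = 100,000,000). If so, a hypothetical helper like the one below can convert them to integers for sorting or analysis. That text format is an assumption, so check a few records in `bilibili_videos.json` first. `Decimal` avoids floating-point rounding when scaling values like `123.4`.

```python
import re
from decimal import Decimal

# Chinese magnitude suffixes: 万 = 10^4, 亿 = 10^8 ("" means no suffix)
UNITS = {"": 1, "万": 10_000, "亿": 100_000_000}


def parse_counts(text: str) -> list[int]:
    """Return every number in `text` as an int, applying any 万/亿 suffix.

    Assumption: fields like play_info look like '123.4万 5678'; which number is
    the play count and which is the danmaku count depends on the page layout.
    """
    counts = []
    # group 1: the number (optionally with decimals), group 2: optional unit
    for number, unit in re.findall(r"(\d+(?:\.\d+)?)\s*(万|亿)?", text):
        counts.append(int(Decimal(number) * UNITS[unit]))
    return counts


if __name__ == "__main__":
    print(parse_counts("123.4万 5678"))
```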

- Scraping the Douban Books Top 250

import asyncio
import json

from crawl4ai import AsyncWebCrawler
from crawl4ai.extraction_strategy import JsonCssExtractionStrategy


async def extract_books():
    # Each book on the list page is a "tr.item" row
    schema = {
        "name": "Douban Book 250",
        "baseSelector": "tr.item",
        "type": "list",
        "fields": [
            {
                "name": "title",
                "type": "text",
                "selector": ".pl2 > a",
            },
            {
                "name": "url",
                "type": "attribute",
                "selector": ".pl2 > a",
                "attribute": "href",
            },
            {
                "name": "info",
                "type": "text",
                "selector": ".pl",
            },
            {
                "name": "rate",
                "type": "text",
                "selector": ".rating_nums",
            },
            {
                "name": "quote",
                "type": "text",
                "selector": "span.inq",
            },
        ],
    }

    extraction_strategy = JsonCssExtractionStrategy(schema, verbose=True)
    all_books = []

    async with AsyncWebCrawler(verbose=True) as crawler:
        # 10 pages x 25 books = Top 250; "start" is the offset of the first book
        for i in range(10):
            result = await crawler.arun(
                url=f"https://book.douban.com/top250?start={i * 25}",
                extraction_strategy=extraction_strategy,
                bypass_cache=True,
            )
            # Stop cleanly and keep what we have (asserts are stripped under python -O)
            if not result.success:
                print(f"Failed to crawl page {i + 1}, stopping")
                break
            books = json.loads(result.extracted_content)
            all_books.extend(books)
            print(f"Extracted {len(books)} books from page {i + 1}")
            # Avoid sending requests too quickly
            await asyncio.sleep(2)

    # Save all book data to a file
    with open("books.json", "w", encoding="utf-8") as f:
        json.dump(all_books, f, ensure_ascii=False, indent=2)

    print(f"\nExtracted {len(all_books)} books in total")
    return all_books


if __name__ == "__main__":
    asyncio.run(extract_books())
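The `info` field packs author, publisher, date and price into one string. It likely follows Douban's usual `author / [translator /] publisher / date / price` layout. If so, it can be split into separate fields as sketched below. `parse_book_info` is hypothetical, and the layout is an assumption worth checking against `books.json`. Splitting on "/" would also break any name that itself contains a slash.

```python
def parse_book_info(info: str) -> dict:
    """Split Douban's 'info' text into its parts.

    Assumption: the text looks like 'author(s) / [translator(s) /] publisher / date / price',
    i.e. the last three '/'-separated parts are publisher, date and price.
    """
    parts = [p.strip() for p in info.split("/") if p.strip()]
    if len(parts) < 4:
        # Unexpected layout: keep the pieces rather than guess what they are
        return {"people": parts, "publisher": None, "date": None, "price": None}
    *people, publisher, date, price = parts
    return {"people": people, "publisher": publisher, "date": date, "price": price}


if __name__ == "__main__":
    print(parse_book_info("Author A / Translator B / Some Press / 2006-5 / 29.00元"))
```

It could be applied to every record, e.g. `book.update(parse_book_info(book["info"]))` for each `book` in `all_books`.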

- Scraping Ctrip attraction reviews

import asyncio
import json

from crawl4ai import AsyncWebCrawler
from crawl4ai.extraction_strategy import JsonCssExtractionStrategy


async def main():
    # Define the comment extraction strategy
    comment_schema = {
        "name": "Comment extractor",
        "baseSelector": ".commentItem",
        "fields": [
            {
                "name": "content",
                "selector": ".commentDetail",
                "type": "text"
            },
            {
                "name": "user",
                "selector": ".userName",
                "type": "text"
            },
            {
                "name": "score",
                "selector": ".score",
                "type": "text"
            }
        ]
    }

    extraction_strategy = JsonCssExtractionStrategy(comment_schema, verbose=True)

    async with AsyncWebCrawler(
        verbose=True,
        javascript=True,
        wait_time=15,
        headers={
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
            'Accept-Language': 'zh-CN,zh;q=0.9',
            'Referer': 'https://you.ctrip.com/'
        }
    ) as crawler:
        try:
            url = "https://you.ctrip.com/sight/nanjing9/19519.html"
            # Reusing the same session_id keeps both arun() calls on one browser tab
            session_id = "ctrip_comments_session"
            print("Fetching comments...")

            # Initial page load
            result = await crawler.arun(
                url=url,
                session_id=session_id,
                extraction_strategy=extraction_strategy,
                wait_for="css:.commentList",
                page_timeout=30000
            )

            all_comments = []
            if result.extracted_content:
                comments = json.loads(result.extracted_content)
                all_comments.extend(comments)
                print(f"Page 1: got {len(comments)} comments")

            # Load just one more page of comments (2 pages in total)
            result = await crawler.arun(
                url=url,
                session_id=session_id,
                # ?. avoids a JavaScript error if the "load more" button is missing
                js_code="document.querySelector('.loadMore')?.click();",
                wait_for="css:.commentList",
                extraction_strategy=extraction_strategy,
                page_timeout=30000,
                js_only=True
            )

            if result.extracted_content:
                comments = json.loads(result.extracted_content)
                all_comments.extend(comments)
                print(f"Page 2: got {len(comments)} comments")

            print("\n=== Comment statistics ===")
            print(f"Total comments collected: {len(all_comments)}")

            if all_comments:
                print("\n=== Sample comments ===")
                for comment in all_comments[:5]:  # only show the first 5 comments
                    print(f"\nUser: {comment.get('user', 'Anonymous')}")
                    print(f"Rating: {comment.get('score', 'N/A')}")
                    print(f"Content: {comment.get('content', 'No content')}")

                # Save all comments to a JSON file
                with open("comments.json", "w", encoding="utf-8") as f:
                    json.dump(all_comments, f, ensure_ascii=False, indent=2)
                print("\nSaved all comments to comments.json")
            else:
                print("No comments found")

        except Exception as e:
            print(f"Error while crawling: {str(e)}")


if __name__ == "__main__":
    asyncio.run(main())
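The "load more" button may append to the existing list instead of replacing it. In that case the second extraction returns the page-1 comments again. Below is a minimal order-preserving de-duplication sketch. `dedupe_comments` is hypothetical, and it assumes that a matching user name and comment text identify the same comment.

```python
def dedupe_comments(comments: list[dict]) -> list[dict]:
    """Drop repeated comments while keeping the original order.

    Assumption: two records with the same 'user' and 'content' are the same comment.
    """
    seen = set()
    unique = []
    for c in comments:
        key = (c.get("user"), c.get("content"))
        if key in seen:  # already kept an identical comment
            continue
        seen.add(key)
        unique.append(c)
    return unique
```

Calling `all_comments = dedupe_comments(all_comments)` before the statistics are printed and the file is written would be enough.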

Summary

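crawl4ai makes Python crawler development simpler and more efficient. Its concise API, powerful features and flexible configuration options have made it a popular new choice for web scraping. Whether the job is simple data collection or complex page interaction, crawl4ai handles it with ease.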

If you're looking for a crawler library that is easy to use yet powerful, crawl4ai is definitely the one to pick.

Full code and more examples

To help you dig deeper into crawl4ai, I've uploaded all the example code to GitCode. Stars are welcome:

https://gitcode.com/weixin_73790979/crawl4ai_demo/tree/main

Closing remarks

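Thanks for reading! I hope this post helps you get to know the crawl4ai library better and sparks more interest in crawler development. Let's use crawl4ai to make data collection more efficient!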
