Paper Reading: "DFA-VLA: Enhancing Robotic Manipulation via Embodied Intelligence"


Abstract

With the rapid advancement of robotic hardware and software technologies, embodied intelligence has become pivotal, enabling physical agents to interact with the environment in real-time via multimodal inputs and make autonomous decisions through a closed-loop sensor-actuator system.

Among mainstream methods, end-to-end Vision-Language-Action (VLA) models efficiently execute robotic tasks by directly mapping perception to actions but suffer from critical limitations: poor modeling of fine-grained visual elements (e.g., occluded regions, small objects) and over-reliance on static cross-modal attention, restricting adaptability and generalization in complex open environments.

To address these, this paper focuses on enhancing task execution accuracy, timeliness, and generalization via embodied intelligence, with a core innovation in the Dynamic Fine-grained Alignment-based Vision-Language-Action (DFA-VLA) model built on a pre-trained large language model backbone.

It integrates two key modules: the Multi-scale Visual-Semantic Modeling (MVSM) Module, which combines a vision transformer and a segment anything model to extract high-resolution semantic features, using semantic masks to boost perception of small objects, occlusions, and cluttered backgrounds (with replaceable encoders for scene adaptation); and the Dynamic Fine-grained Alignment and Fusion (DFAF) Module, which employs mask-guided sparse dynamic attention for efficient language-visual alignment (reducing redundant computations) and a dynamic gating network (via text semantics) to adaptively switch between vision- and language-driven strategies.

Both evaluations on LIBERO benchmarks and real-world settings show that DFA-VLA outperforms state-of-the-art methods, especially in spatial reasoning and long-term tasks, with higher success rates and inference efficiency.

Parameter-efficient fine-tuning (e.g., LoRA) reduces resource use for task/hardware adaptation, while a Sim2Real pipeline validates real-world effectiveness on physical robots, confirming improved generalization in unstructured scenarios.

Conclusion

This work addresses critical limitations of existing Vision-Language-Action (VLA) models in robotic manipulation, particularly their poor handling of fine-grained visual elements (e.g., occlusions, small objects) and rigid cross-modal alignment.

We propose the Dynamic Fine-grained Alignment-based VLA (DFA-VLA) model, which integrates two key innovations: (1) the Multi-scale Visual-Semantic Modeling (MVSM) module, fusing high-resolution visual features with semantic masks to enhance perception of complex visual scenarios; and (2) the Dynamic Fine-grained Alignment and Fusion (DFAF) module, leveraging mask-guided sparse attention and text-semantic-driven dynamic gating to enable adaptive cross-modal alignment.

Future work will advance Vision-Language-Action (VLA) models in embodied robotics through four interconnected directions:

first, expanding experimental scenarios to include real-world tasks with high semantic ambiguity and complex combinatorial structures (e.g., "stacking fragile objects on soft cushions" or "sorting by color-shape rules") to better showcase the model’s ability to link language understanding and action execution, addressing current limitations in object diversity and task complexity;

second, moving beyond incremental optimizations of existing architectures (e.g., CLIPort, OpenVLA John D, Jane S, Alice J, et al. (2023)) to explore foundational innovations, such as building unified task abstraction structures (e.g., "task grammar trees") and reconfiguring the perception-understanding-action pipeline around task logic, while breaking static cross-modal fusion patterns with dynamic interaction mechanisms;

third, enhancing real-world utility and human-robot collaboration by integrating large language models and active learning, enabling robots to autonomously clarify ambiguous instructions (e.g., "Do you mean placing A into B?") and develop self-supervised review abilities for multi-turn tasks (e.g., "I completed the first step; what’s next?");

and finally, exploring cross-task, cross-scene, and cross-modal generalization through multi-domain pretraining (e.g., kitchen operations, indoor cleaning) with meta-learning and multimodal self-supervision, while unifying task levels via a universal language interface to generate complete task execution graphs from single sentences.

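Paper Overview

Title: DFA-VLA: Enhancing Robotic Manipulation via Embodied Intelligence

Core contribution: a Vision-Language-Action (VLA) model with dynamic fine-grained alignment, aimed at two limitations of existing end-to-end VLA models: weak fine-grained visual modeling and static cross-modal attention.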

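Core Problem and Motivation

Existing VLA models (e.g., RT-2, OpenVLA) have two key problems:

- Insufficient fine-grained visual modeling: they struggle with details such as occluded regions and small objects.
- Static cross-modal attention: over-reliance on a fixed cross-modal attention mechanism limits adaptability and generalization in complex open environments.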

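Method Architecture

DFA-VLA contains two core modules:

1. MVSM (Multi-scale Visual-Semantic Modeling module)

| Component | Function | Technical details |
|---|---|---|
| DinoV2 | Global scene feature extraction | Outputs a $14 \times 14$ feature map that captures context such as the background layout |
| SAM | Instance segmentation | Generates bounding boxes and masks for target objects |
| RoIAlign | Local feature extraction | Crops task-relevant regions from the global feature map |
| Projection layer | Feature alignment | Maps visual features into the LLaMA 2 text embedding space (4096-dimensional) |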

Mathematical formulation:

$$\mathbf{V}_{\text{concat}} = [\text{Flatten}(\mathbf{V}_{\text{global}}); \mathbf{V}_{\text{local}}] \in \mathbb{R}^{(S^2+k) \times d_v}$$

Here $S \times S$ is the size of the global feature map ($14 \times 14$ in the table above), $k$ is the number of local region features, and $d_v$ is the visual feature dimension.
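The concatenation above can be sketched in a few lines of NumPy. This is only an illustration of the shapes involved, not the paper's implementation: the function name `build_visual_tokens`, the random inputs, and the reduced dimensions `d_v = 32` and `d_text = 64` (instead of the 4096-dimensional LLaMA 2 space) are assumptions made for the demo.

```python
import numpy as np

def build_visual_tokens(v_global, v_local, w_proj):
    """Concatenate flattened global features with local region features, then project.

    v_global: (S, S, d_v) global feature map
    v_local:  (k, d_v) one pooled feature per segmented region
    w_proj:   (d_v, d_text) hypothetical linear projection into the text space
    """
    s, _, d_v = v_global.shape
    flat = v_global.reshape(s * s, d_v)                  # Flatten(V_global): (S^2, d_v)
    v_concat = np.concatenate([flat, v_local], axis=0)   # (S^2 + k, d_v)
    return v_concat, v_concat @ w_proj                   # projected: (S^2 + k, d_text)

rng = np.random.default_rng(0)
S, k, d_v, d_text = 14, 3, 32, 64  # dimensions shrunk for the demo
v_global = rng.normal(size=(S, S, d_v))
v_local = rng.normal(size=(k, d_v))
w_proj = rng.normal(size=(d_v, d_text))

v_concat, v_proj = build_visual_tokens(v_global, v_local, w_proj)
print(v_concat.shape, v_proj.shape)  # (199, 32) (199, 64)
```

With $S = 14$ and $k = 3$ the token count is $14^2 + 3 = 199$, which matches the $(S^2 + k)$ row dimension in the formula.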

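2. DFAF (Dynamic Fine-grained Alignment and Fusion module)

Key ideas:

- Mask-guided sparse dynamic attention: attends only to the Top-K most relevant visual regions, reducing redundant computation.
- Dynamic gating network: adaptively switches between vision-driven and language-driven strategies based on the text semantics.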

Computation flow:

$$\alpha = \sigma(W \cdot \text{Mean}(H_{\text{text}}))$$

$$V_{\text{sparse}} = \text{TopK}(\cos(H_{\text{text}}, \mathbf{V}_{\text{proj}}))$$

$$F = \alpha \odot V_{\text{sparse}} + (1-\alpha) \odot \text{Broadcast}(\text{Mean}(H_{\text{text}}))$$

Design note: the first 3 Transformer layers use DFAF for fine-grained alignment, while layers 4-32 focus on high-level task planning.
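The three steps of the computation flow (gate, Top-K selection, fusion) can be sketched as follows. Several details are assumptions, since these notes do not specify them: the text states are mean-pooled before the cosine similarity is computed, the gate weight `w_gate` is a square matrix that yields one gate value per channel, and the mask guidance is omitted. The function name `dfaf_fuse` is hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def dfaf_fuse(h_text, v_proj, w_gate, top_k=5):
    """h_text: (T, d) text states; v_proj: (N, d) visual tokens; w_gate: (d, d)."""
    t_mean = h_text.mean(axis=0)                       # Mean(H_text): (d,)
    alpha = sigmoid(w_gate @ t_mean)                   # gate values in (0, 1): (d,)
    # Cosine similarity between the pooled text vector and every visual token.
    sims = (v_proj @ t_mean) / (
        np.linalg.norm(v_proj, axis=1) * np.linalg.norm(t_mean) + 1e-8)
    idx = np.argsort(sims)[::-1][:top_k]               # indices of the K most similar tokens
    v_sparse = v_proj[idx]                             # (K, d)
    fused = alpha * v_sparse + (1.0 - alpha) * t_mean  # t_mean broadcasts over the K rows
    return fused, idx, alpha

rng = np.random.default_rng(0)
T, N, d = 6, 199, 16
h = rng.normal(size=(T, d))
v = rng.normal(size=(N, d))
w = 0.1 * rng.normal(size=(d, d))

fused, idx, alpha = dfaf_fuse(h, v, w, top_k=5)
print(fused.shape)  # (5, 16)
```

In this sketch a gate value near 1 keeps the selected visual feature (vision-driven), while a value near 0 replaces it with the pooled text vector (language-driven), which is how the formula for $F$ interpolates between the two strategies.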

Experimental Setup

Datasets

| Dataset | Purpose | Task types |
|---|---|---|
| LIBERO | Main benchmark | Four task suites: Spatial, Object, Goal, Long |
| BridgeData V2 | Pre-training | 22 tasks, testing 5 kinds of generalization |

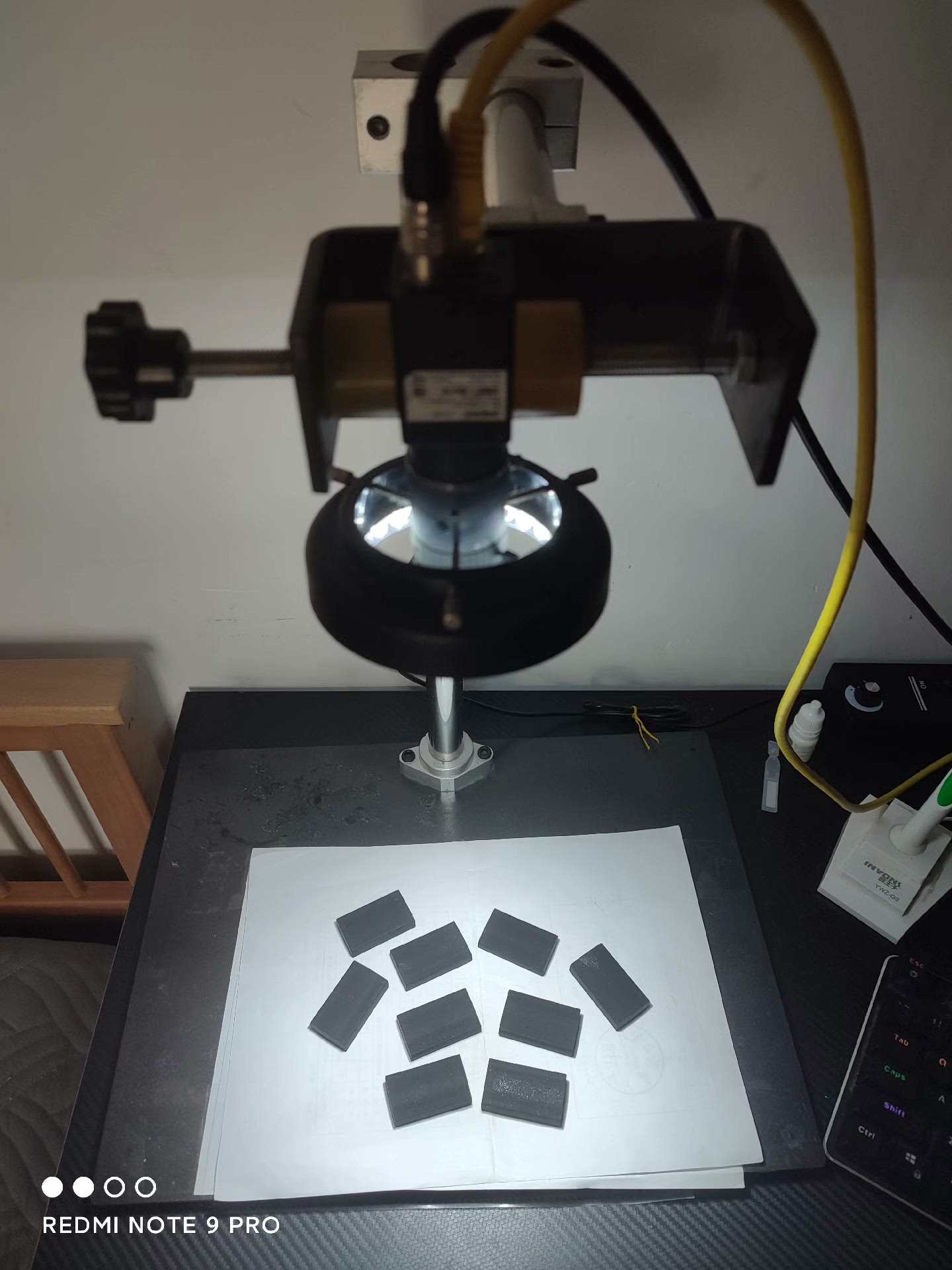

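Hardware Platform

- Simulation: LIBERO simulator
- Real robots: Zhichang (智昌) CR5 and Lebai (乐白) LB-10, both 6-DoF robotic arms
- Sensors: Intel RealSense D435i, GoPro camera

Training Optimization Techniques

- LoRA parameter-efficient fine-tuning
- INT8 quantized inference
- FlashAttention + FSDP multi-GPU parallelism
- Automatic mixed precision (AMP)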
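To make the LoRA item concrete, here is a minimal NumPy sketch of the general technique: a frozen weight matrix plus a trainable low-rank update scaled by `alpha / rank`. The class name `LoRALinear`, the rank, the scaling value, and the layer size are illustrative assumptions; the notes do not state the configuration used for DFA-VLA.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, w_frozen, rank=4, alpha=8.0, seed=0):
        d_out, d_in = w_frozen.shape
        rng = np.random.default_rng(seed)
        self.w = w_frozen                                   # frozen, never updated
        self.a = rng.normal(scale=0.01, size=(rank, d_in))  # trainable, small random init
        self.b = np.zeros((d_out, rank))                    # trainable, zero-initialised
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.w.T + self.scale * (x @ self.a.T) @ self.b.T

    def trainable_params(self):
        return self.a.size + self.b.size

w = np.random.default_rng(1).normal(size=(64, 64))
layer = LoRALinear(w, rank=4)
x = np.ones((2, 64))
print(layer(x).shape, layer.trainable_params(), w.size)  # (2, 64) 512 4096
```

Because `b` starts at zero, the adapted layer initially reproduces the frozen layer exactly, and only 512 of the 4096 + 512 parameters would be updated during fine-tuning in this toy setting.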

Experimental Results

LIBERO benchmark (Table 1)

| Method | Spatial SR | Object SR | Goal SR | Long SR | Average SR | Average Rank |
|---|---|---|---|---|---|---|
| Diffusion Policy | 78.3% | 92.5% | 68.3% | 50.5% | 72.4% | 2.5 |
| Octo | 78.9% | 85.7% | 84.6% | 51.1% | 75.1% | 2.0 |
| OpenVLA | 84.7% | 88.4% | 79.2% | 53.7% | 76.5% | 1.5 |
| DFA-VLA | 85.5% | 89.8% | 80.2% | 55.5% | 77.8% | 1.25 |

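Key finding: DFA-VLA achieves the highest average SR (77.8%) and the best results on the Spatial and Long suites, with a gain of 1.8 percentage points over OpenVLA on long-horizon tasks (Long). It does not lead on every suite: Diffusion Policy is higher on Object (92.5% vs. 89.8%) and Octo is higher on Goal (84.6% vs. 80.2%).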
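The averages and per-suite leaders can be recomputed directly from the Table 1 rows. The short script below uses only the numbers in the table; the variable names are mine.

```python
# Per-suite success rates (%) copied from Table 1: Spatial, Object, Goal, Long.
table1 = {
    "Diffusion Policy": [78.3, 92.5, 68.3, 50.5],
    "Octo": [78.9, 85.7, 84.6, 51.1],
    "OpenVLA": [84.7, 88.4, 79.2, 53.7],
    "DFA-VLA": [85.5, 89.8, 80.2, 55.5],
}
suites = ["Spatial", "Object", "Goal", "Long"]

# Unweighted mean over the four suites for each method.
averages = {name: sum(sr) / len(sr) for name, sr in table1.items()}

# Method with the highest success rate on each suite.
best_per_suite = {
    suite: max(table1, key=lambda name: table1[name][i])
    for i, suite in enumerate(suites)
}

# Gap on the Long suite, in percentage points.
long_gain = table1["DFA-VLA"][3] - table1["OpenVLA"][3]

for name, avg in averages.items():
    print(f"{name}: {avg:.2f}")
print(best_per_suite)
print(f"Long gain over OpenVLA: {long_gain:.1f} points")
```

The recomputed means agree with the Average SR column after rounding, and the per-suite maxima show DFA-VLA leading on Spatial and Long only.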

Ablation study (Table 3)

| ID | MVSM | DFAF | SR | Rank | Latency | Params |
|---|---|---|---|---|---|---|
| 1 | ✗ | ✗ | 82.3% | 3.1 | 55 ms | 78.5M |
| 2 | ✓ | ✗ | 84.7% | 1.9 | 62 ms | 146.3M |
| 3 | ✗ | ✓ | 84.0% | 2.3 | 58 ms | 83.2M |
| 4 | ✓ | ✓ (no gating) | 85.1% | 1.5 | 60 ms | 149.6M |
| 5 | ✓ | ✓ (full) | 85.5% | 1.2 | 60 ms | 151.2M |

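Takeaways:

- MVSM alone adds 2.4 percentage points over the baseline (82.3% to 84.7%), attributed to better spatial understanding.
- DFAF alone adds 1.7 percentage points (82.3% to 84.0%), attributed to more efficient feature aggregation.
- Combining both modules with the gating mechanism gives the best result (85.5%).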
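The gains quoted above, and the cost each configuration pays in latency, follow from simple differences against row 1 of Table 3. The script below only restates the table's numbers; the dictionary layout and the string labels for the DFAF variants are my own.

```python
# Rows copied from Table 3: (MVSM, DFAF variant, SR %, latency ms, params M).
rows = {
    1: (False, "no", 82.3, 55, 78.5),
    2: (True, "no", 84.7, 62, 146.3),
    3: (False, "yes", 84.0, 58, 83.2),
    4: (True, "no gate", 85.1, 60, 149.6),
    5: (True, "full", 85.5, 60, 151.2),
}

baseline_sr, baseline_ms = rows[1][2], rows[1][3]

# Success-rate gain (percentage points) and added latency (ms) relative to row 1.
gains = {i: r[2] - baseline_sr for i, r in rows.items() if i != 1}
extra_latency = {i: r[3] - baseline_ms for i, r in rows.items() if i != 1}

# Contribution of the gating network on top of MVSM + ungated DFAF.
gate_gain = rows[5][2] - rows[4][2]

for i in sorted(gains):
    print(f"row {i}: +{gains[i]:.1f} points SR, +{extra_latency[i]} ms")
print(f"gating alone: +{gate_gain:.1f} points")
```

Read this way, the full model gains 3.2 points for 5 ms of extra latency, and gating accounts for 0.4 of those points at no measured latency cost.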

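Hyperparameter analysis (Table 2)

| Parameter | Best value | Key finding |
|---|---|---|
| $\lambda_1$ (alignment loss weight) | 0.5 | Too high a value causes over-regularization |
| $\lambda_2$ (mask loss weight) | 0.5 | Works best jointly with $\lambda_1$ |
| Input resolution | 224×224 | 512×512 improves SR by only 0.1% while increasing latency by 20% |
| Top-K | 5 | Balances accuracy and efficiency |
| Gating mechanism | Learnable $\alpha$ | 2.1-2.6% better than fixed values |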
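These notes give the two loss weights but not the objective they enter. One plausible reading, stated here purely as an assumption, is a weighted sum of a main task loss and the two auxiliary terms. The symbols $\mathcal{L}_{\text{task}}$, $\mathcal{L}_{\text{align}}$, and $\mathcal{L}_{\text{mask}}$ are hypothetical labels, not notation taken from the paper:

$$\mathcal{L} = \mathcal{L}_{\text{task}} + \lambda_1 \mathcal{L}_{\text{align}} + \lambda_2 \mathcal{L}_{\text{mask}}, \qquad \lambda_1 = \lambda_2 = 0.5$$

Under this reading, a large $\lambda_1$ would let the alignment term dominate the gradient, which is consistent with the over-regularization remark in the table.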

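Real-world experiments (Figure 3 & Table 4)

On the 22 real-world BridgeData V2 tasks:

- Average success rate: 71.1% (vs. 70.6% for OpenVLA)
- Strongest on language-understanding tasks (90% vs. 85%)
- Improvements are also reported in categories such as visual generalization and physical generalization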

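Highlights and Contributions

- Fine-grained perception: described as the first effective combination of SAM segmentation with DinoV2 features, targeting small objects and occlusions.
- Dynamic computation: sparse attention plus gating allocates computation adaptively.
- Practical deployment: a complete Sim2Real pipeline validates the model on real robots.
- Efficiency: 60 ms inference latency, suitable for real-time control.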

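Limitations and Future Work

Current limitations:

- Object diversity is still limited.
- Task complexity needs to increase.
- Tasks with complex combinatorial structure (e.g., "stacking fragile objects on soft cushions") have not been evaluated.

Future directions (Section 6 of the paper):

- Extending to tasks with high semantic ambiguity and complex combinatorial structure
- Building unified task abstraction structures (e.g., "task grammar trees")
- Integrating large language models for active learning and multi-turn dialogue
- Cross-task, cross-scene, and cross-modal generalization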
