【SeNet模块学习】结合MNIST手写数据识别任务学习

SeNet模块基础学习

·

前置图像分类入门任务MNIST手写数据识别:【图像分类入门】MNIST手写数据识别-CSDN博客

1.SeNet模块

class SENet_Layer(nn.Module):

def __init__(self, channel, reduction=16): # 默认r为16

super(SENet_Layer, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1) # 自适应平均池化层,输出大小1*1

self.fc = nn.Sequential(

nn.Linear(channel, channel//reduction),

nn.ReLU(),

nn.Linear(channel//reduction, channel),

nn.Sigmoid(), # 将通道权重输出为0-1

)

def forward(self, x):

b, c, _, _ = x.size() # 输入的数据x为四维,提取批次数量和通道数c

y = self.avg_pool(x).view(b, c) # 经过池化层(挤压层)输出为b*c*1*1,展平为b*c以经过之后的全连接层(激励层)

y = self.fc(y).view(b, c, 1, 1) # 生成通道权重,输出恢复为原思维结构以供乘积

return x * y.expand_as(x) # 对应元素进行逐一相乘上述即为SeNet层的基础训练框架,首先通过全局平均池化层(挤压)将图像宽和高压缩至1*1;紧接的激励层包括两个全连接层,第一个全连接层将输入维度降低到C/r,以平衡模型复杂性、提升计算效率、加强特征抽象和提升注意力机制效果等,第一个全连接层使用ReLU激活函数增加非线性;再经过第二个全连接层恢复通道维度为C,利用sigmoid激活函数将最终输出的通道权重防缩到0-1之间。

2.完整训练模块

class CNN(nn.Module): # 训练模型

def __init__(self):

super(CNN, self).__init__()

# 初始1*28*28

self.layer01 = nn.Sequential(

# 1.卷积操作 卷积层(h2=w2=(28-5+2*2)/1+1=28)

nn.Conv2d(in_channels=1, out_channels=64, kernel_size=5, stride=1, padding=2, bias=True),

# 2.归一化操作BN层

nn.BatchNorm2d(64),

# 3.激活层 使用Relu

nn.ReLU(inplace=True),

# 4.最大池化

nn.MaxPool2d(2)

) # 经过layer0维度变为64*14*14

self.Se_layer01 = SENet_Layer(64)

self.dropout = nn.Dropout(p=0.5) # 丢弃

self.layer02 = nn.Sequential(

# 1.卷积操作 卷积层(h3=w3=(14-3+1*2)/1+1=14)

nn.Conv2d(64, 32, kernel_size=3, stride=1, padding=1, bias=True),

nn.BatchNorm2d(32),

nn.ReLU(),

nn.MaxPool2d(2)

) # 经过layer0维度变为32*7*7

self.Se_layer02 = SENet_Layer(32)

self.fc = torch.nn.Linear(in_features=7 * 7 * 32, out_features=10) # b*10

def forward(self, x):

x = self.layer01(x)

x = self.Se_layer01(x)

x = self.dropout(x)

x = self.layer02(x)

x = self.Se_layer02(x)

x = x.view(x.size()[0], -1) # 将图像数据展开成一维的

x = self.fc(x)

return x完整的训练模块如上所示,在原两个卷积层后添加SeNet层,由于MNIST这样的简单手写数字识别任务,使用较简单的卷积网络就可以达到非常好的结果,过于复杂化神经网络框架反而可能能导致过拟合等问题,所以期间加入了丢弃层,在训练集较小时防止过拟合。

3.完整代码

import numpy as np

import torch

import torchvision.datasets as dataset

import torchvision.transforms as transforms

import torch.nn as nn

from torch.utils.data import DataLoader, random_split

import matplotlib.pyplot as plt

import time

class SENet_Layer(nn.Module): # SeNet模块

def __init__(self, channel, reduction=16): # 默认r为16

super(SENet_Layer, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1) # 自适应平均池化层,输出大小1*1

self.fc = nn.Sequential(

nn.Linear(channel, channel//reduction),

nn.ReLU(),

nn.Linear(channel//reduction, channel),

nn.Sigmoid(), # 将通道权重输出为0-1

)

def forward(self, x):

b, c, _, _ = x.size() # 输入的数据x为四维,提取批次数量和通道数c

y = self.avg_pool(x).view(b, c) # 经过池化层(挤压层)输出为b*c*1*1,展平为b*c以经过之后的全连接层(激励层)

y = self.fc(y).view(b, c, 1, 1) # 生成通道权重,输出恢复为原思维结构以供乘积

return x * y.expand_as(x) # 对应元素进行逐一相乘

class CNN(nn.Module): # 训练模型

def __init__(self):

super(CNN, self).__init__()

# 初始1*28*28

self.layer01 = nn.Sequential(

# 1.卷积操作 卷积层(h2=w2=(28-5+2*2)/1+1=28)

nn.Conv2d(in_channels=1, out_channels=64, kernel_size=5, stride=1, padding=2, bias=True),

# 2.归一化操作BN层

nn.BatchNorm2d(64),

# 3.激活层 使用Relu

nn.ReLU(inplace=True),

# 4.最大池化

nn.MaxPool2d(2)

) # 经过layer0维度变为64*14*14

self.Se_layer01 = SENet_Layer(64)

self.dropout = nn.Dropout(p=0.5) # 丢弃

self.layer02 = nn.Sequential(

# 1.卷积操作 卷积层(h3=w3=(14-3+1*2)/1+1=14)

nn.Conv2d(64, 32, kernel_size=3, stride=1, padding=1, bias=True),

nn.BatchNorm2d(32),

nn.ReLU(),

nn.MaxPool2d(2)

) # 经过layer0维度变为32*7*7

self.Se_layer02 = SENet_Layer(32)

self.fc = torch.nn.Linear(in_features=7 * 7 * 32, out_features=10) # b*10

def forward(self, x):

x = self.layer01(x)

x = self.Se_layer01(x)

x = self.dropout(x)

x = self.layer02(x)

x = self.Se_layer02(x)

x = x.view(x.size()[0], -1) # 将图像数据展开成一维的

x = self.fc(x)

return x

def train_val(train_loader, val_loader, device, model, loss, optimizer, epochs, save_path): # 正式训练函数

model = model.to(device)

plt_train_loss = [] # 训练过程loss值,存储每轮训练的均值

plt_train_acc = [] # 训练过程acc值

plt_val_loss = [] # 验证过程

plt_val_acc = []

max_acc = 0 # 以最大准确率来确定训练过程的最优模型

for epoch in range(epochs): # 开始训练

train_loss = 0.0

train_acc = 0.0

val_acc = 0.0

val_loss = 0.0

start_time = time.time()

model.train()

for index, (images, labels) in enumerate(train_loader):

images, labels = images.cuda(), labels.cuda()

optimizer.zero_grad() # 梯度置0

pred = model(images)

bat_loss = loss(pred, labels) # 算交叉熵loss

bat_loss.backward() # 回传梯度

optimizer.step() # 更新模型参数

train_loss += bat_loss.item()

# 注意此时的pred结果为64*10的张量(概率分布)

pred = pred.argmax(dim=1) # 返回给定维度1上最大值的索引

train_acc += (pred == labels).sum().item()

print("当前为第{}轮训练,批次为{}/{},该批次总loss:{} | 正确acc数量:{}"

.format(epoch+1, index+1, len(train_data)//config["batch_size"],

bat_loss.item(), (pred == labels).sum().item()))

# 计算当前Epoch的训练损失和准确率,并存储到对应列表中:

plt_train_loss.append(train_loss / train_loader.dataset.__len__())

plt_train_acc.append(train_acc / train_loader.dataset.__len__())

model.eval() # 模型调为验证模式

with torch.no_grad(): # 验证过程不需要梯度回传,无需追踪grad

for index, (images, labels) in enumerate(val_loader):

images, labels = images.cuda(), labels.cuda()

pred = model(images)

bat_loss = loss(pred, labels) # 算交叉熵loss

val_loss += bat_loss.item()

pred = pred.argmax(dim=1)

val_acc += (pred == labels).sum().item()

print("当前为第{}轮验证,批次为{}/{},该批次总loss:{} | 正确acc数量:{}"

.format(epoch+1, index+1, len(val_data)//config["batch_size"],

bat_loss.item(), (pred == labels).sum().item()))

val_acc = val_acc / val_loader.dataset.__len__()

if val_acc > max_acc:

max_acc = val_acc

torch.save(model, save_path)

plt_val_loss.append(val_loss / val_loader.dataset.__len__())

plt_val_acc.append(val_acc)

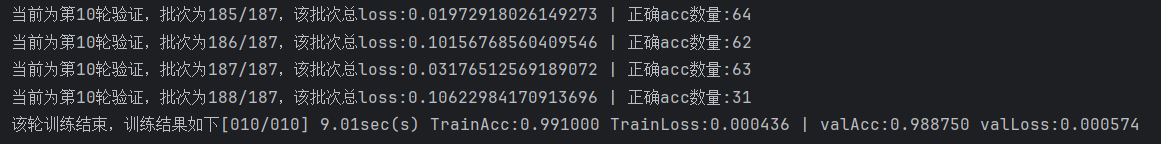

print('该轮训练结束,训练结果如下[%03d/%03d] %2.2fsec(s) TrainAcc:%3.6f TrainLoss:%3.6f | valAcc:%3.6f valLoss:%3.6f \n\n'

% (epoch+1, epochs, time.time()-start_time, plt_train_acc[-1], plt_train_loss[-1], plt_val_acc[-1], plt_val_loss[-1]))

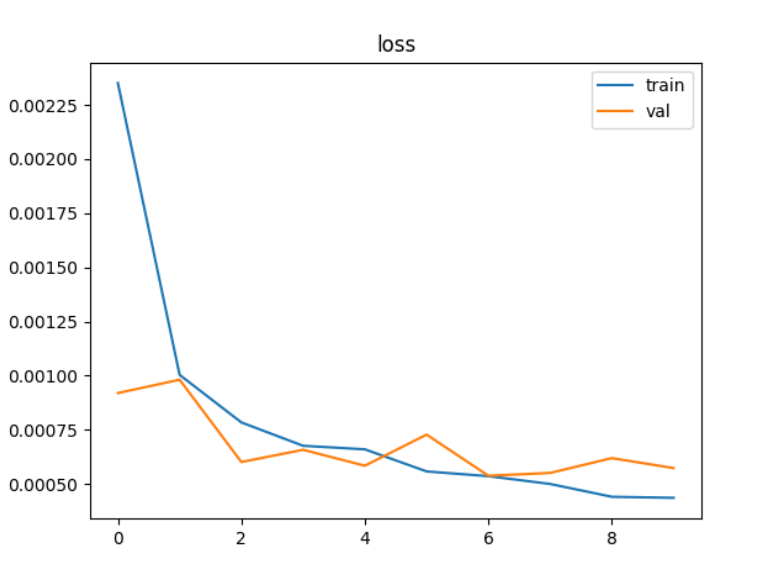

plt.plot(plt_train_loss) # 画图

plt.plot(plt_val_loss)

plt.title('loss')

plt.legend(['train', 'val'])

plt.show()

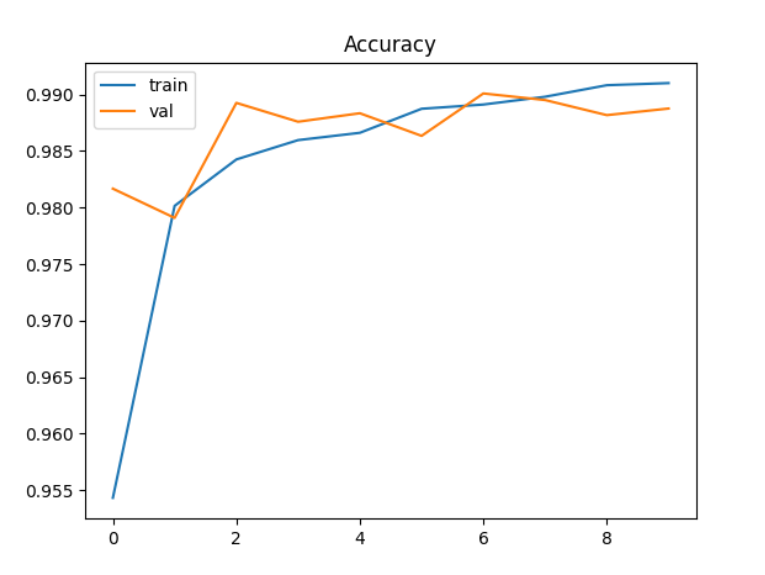

plt.plot(plt_train_acc)

plt.plot(plt_val_acc)

plt.title('Accuracy')

plt.legend(['train', 'val'])

# plt.savefig('./acc.png')

plt.show()

def test(save_path, test_loader, device, loss): # 测试函数

best_model = torch.load(save_path).to(device)

test_loss = 0.0

test_acc = 0.0

start_time = time.time()

with torch.no_grad():

for index, (images, labels) in enumerate(test_loader):

images, labels = images.cuda(), labels.cuda()

pred = best_model(images)

bat_loss = loss(pred, labels) # 算交叉熵loss

test_loss += bat_loss.item()

pred = pred.argmax(dim=1)

test_acc += (pred == labels).sum().item()

print("正在最终测试:批次为{}/{},该批次总loss:{} | 正确acc数量:{}"

.format(index + 1, len(test_data) // config["batch_size"],

bat_loss.item(), (pred == labels).sum().item()))

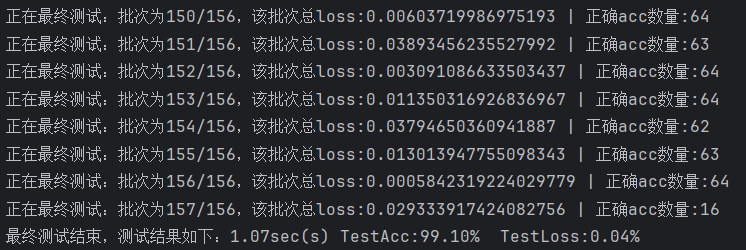

print('最终测试结束,测试结果如下:%2.2fsec(s) TestAcc:%.2f%% TestLoss:%.2f%% \n\n'

% (time.time() - start_time, test_acc/test_loader.dataset.__len__()*100, test_loss/test_loader.dataset.__len__()*100))

# 加载MNIST数据

ori_data = dataset.MNIST(

root="./data",

train=True,

transform=transforms.ToTensor(),

download=True

)

test_data = dataset.MNIST(

root="./data",

train=False,

transform=transforms.ToTensor(),

download=True

)

# print(ori_data)

# print(test_data)

# 查看某一样本数据

# image, label = ori_data[0]

# print(f"Image shape: {image.shape}, Label: {label}")

# image = image.squeeze().numpy()

# plt.imshow(image)

# plt.title(f'Label: {label}')

# plt.show()

config = {

"train_size_perc": 0.8,

"batch_size": 64,

"learning_rate": 0.01,

"epochs": 10,

"save_path": "model_save/best_model.pth"

}

# 设置训练集和验证集的比例

train_size = int(config["train_size_perc"] * len(ori_data)) # 80%用于训练

val_size = len(ori_data) - train_size # 20%用于验证

# 使用random_split划分数据集

train_data, val_data = random_split(ori_data, [train_size, val_size])

train_loader = DataLoader(dataset=train_data, batch_size=config["batch_size"], shuffle=True)

val_loader = DataLoader(dataset=val_data, batch_size=config["batch_size"], shuffle=False)

test_loader = DataLoader(dataset=test_data, batch_size=config["batch_size"], shuffle=True)

# print(f'Training Set Size: {len(train_loader.dataset)}')

# print(f'Validation Set Size: {len(val_loader.dataset)}')

# print(f'Testing Set Size: {len(test_loader.dataset)}')

model = CNN()

loss = nn.CrossEntropyLoss() # 交叉熵损失函数

optimizer = torch.optim.Adam(model.parameters(), lr=config["learning_rate"]) # 优化器

# optimizer = torch.optim.AdamW(model.parameters(), lr=0.01, weight_decay=1e-4)\

device = "cuda" if torch.cuda.is_available() else "cpu"

# print(device)

train_val(train_loader, val_loader, device, model, loss, optimizer, config["epochs"], config["save_path"])

test(config["save_path"], test_loader, device, loss)4.训练结果

结果准确率仍在99%左右,无极大明显提升 。

无SeNet模块的MNIST手写数据识别任务:【图像分类入门】MNIST手写数据识别-CSDN博客

DAMO开发者矩阵,由阿里巴巴达摩院和中国互联网协会联合发起,致力于探讨最前沿的技术趋势与应用成果,搭建高质量的交流与分享平台,推动技术创新与产业应用链接,围绕“人工智能与新型计算”构建开放共享的开发者生态。

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)